Does the behavior of searchers impact the ranking of a web page? For instance, if I am searching for XYZ and the current #1 is a review page, but the real intent of the search is for a product page, will enough users be able to impact the search results purely by choosing the product page instead of the review page?

As far as I can tell, Google's official position is that this will not impact rankings.



Google have never been asked quite that question. Instead people generally waste their time asking if the specific page clicked will rank higher.

What you talk about here, determining query intent based on notable and specific preferences for certain types of pages (not specific pages), is exactly what Click Tracking on SERPs is used for, and precisely how Google tweak the algorithms for query intent. RankBrain is a specific machine-learning component of Google that bypasses some of the need for Click Through Rate (CTR) by looking to see if a query type they don't have CTR data for closely resembles one that they do.

Just to add, where there is no clear single intent, but rather several different intentions, CTR data again helps spot this, and can lead to a Search Engine Result Page (SERP) that enforces a mix of content and interpretations.

That's exactly what I've read from you and Bill and from the patent info. I think I conflated the two different questions.

It's obvious they match rankings to intent, but can you use fake intent on a small enough keyword to alter the search results? Or is it too difficult to trick Google?

Thanks for your answer

Ammon Johns 🎓 » Andrew

That's where it gets complex pretty fast. It all depends on the search volume and the search term. For example, if a query has several thousand searches per day, you'd have to assume needing at least 10% of that volume, all from unique IPs and machines in the dataset, consistently over months, to force a lasting change.

You can force a temporary change much more easily, but this still depends on unique IP addresses and on fooling the tracking into being sure these are unique users. And while it can kick in within hours, the effects drop off very quickly if the trends reverse a few days or a week or so later. I've never had the resources to test it, but my theory is you'd need to run continuous searches for at least a month to get any kind of lasting change, and even that would probably only survive about a month of back-to-normal patterns.

Where certain terms may have a broader classification, e.g. service terms triggering local, you'd likely need a much, much higher and more sustained volume.
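To put rough numbers on that rule of thumb, here is a minimal Python sketch. Only the 10% share comes from the comment above; the 3,000 searches per day, the 90-day window, and the function name `clicks_needed` are made-up inputs for illustration, not measured values.

```python
def clicks_needed(daily_searches, share=0.10, days=30):
    """Scale of a sustained click campaign under the '10% of volume' rule of thumb.

    share and days are assumptions to play with, not known thresholds.
    """
    per_day = round(daily_searches * share)  # unique-looking searchers needed each day
    return {"per_day": per_day, "total": per_day * days}


# Hypothetical query: 3,000 searches per day, sustained for about three months.
estimate = clicks_needed(daily_searches=3000, share=0.10, days=90)
print(estimate)
```

Even at this modest volume, every one of those searches would have to look like a distinct user on a distinct IP and machine, which is where the real cost sits.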

Keith L Evans 🎓

My nonsensical, non-technical answer: We all know G gets queries wrong. And then their Artificial Intelligence (AI) learns from the wrong actions of users.

And we all know strong signals like links and on-page can greatly influence rankings (and we manipulate these signals), which now makes it harder for G to really determine the better answer.

G's data can show that someone went to the Review page and then clicked through to the Product page, which satisfied the user. So it keeps the Review page at #1.

In the eyes of G, 'good' is really 'good enough for now'.

IMPORTANT: Nearly all query results suck. We are only seeing pages that suck the least. A much better page is sitting back on Page 2, 3 or 4, praying to get a kick up.

Leon

There is a unique AI problem Google is trying to solve here, called "learning to rank". There are only a few ways to efficiently solve this problem, and all of them include the use of click data to validate and modify search results. I don't know the limitations or how much of an impact one can have on a SERP, but in theory it's possible to influence them. Here's a nice video on the subject: "Text Classification 5: Learning to Rank", https://youtu.be/2UpLin5T_E4
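For anyone wondering what "using click data" can look like in a learning-to-rank setup, here is a minimal Python sketch of one heuristic from the academic literature: a clicked result is treated as preferred over any unclicked result that was ranked above it. The page names and the function name `click_preferences` are hypothetical, and nothing here is a claim about what Google actually runs.

```python
from typing import List, Set, Tuple


def click_preferences(ranking: List[str], clicked: Set[str]) -> List[Tuple[str, str]]:
    """Return (preferred, over) pairs from one SERP impression.

    Each clicked result is preferred over every unclicked result shown above it.
    """
    prefs = []
    for pos, doc in enumerate(ranking):
        if doc in clicked:
            for skipped in ranking[:pos]:
                if skipped not in clicked:
                    prefs.append((doc, skipped))
    return prefs


# Hypothetical SERP where the searcher skips the review and picks the product page.
serp = ["review-page", "forum-thread", "product-page", "news-story"]
pairs = click_preferences(serp, clicked={"product-page"})
print(pairs)
```

Pairs like these, collected over many searchers, are the kind of training signal a ranking model could learn from, which is why a single click means little and a consistent pattern means more.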

Nick

I think so.

Snow Teeth Whitening started ranking for snow after like 5 years.

My theory is that, until recently, not enough people searching for 'snow' wanted teeth whitening.

How else could Google do this across trillions of searches, if not through User Experience (UX)?

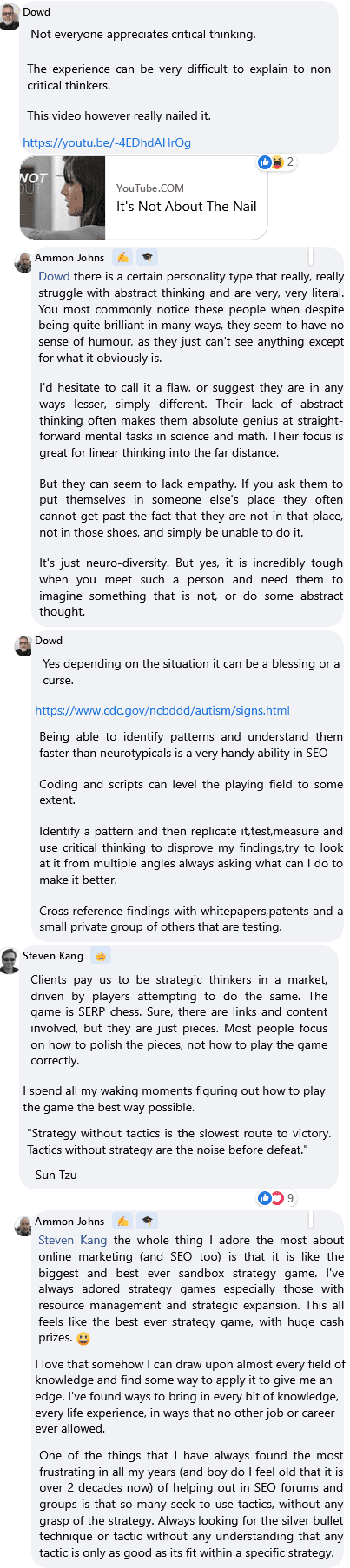

That sort of question – how else – is always a fun thought experiment, and a part of critical thinking in Search Engine Optimization (SEO). There are certainly ways that entity recognition based on the Knowledge Graph could be used. But then the even more important question becomes *why else*, because if there is no search demand for a particular entity, all the how in the world isn't going to improve anyone's user experience of the Search Engine Result Page (SERP).

Nick » Ammon Johns

Agreed that it is interesting to consider how Google could evaluate intent.

In your Knowledge Graph example, what do you think the mechanism could have been for Snow Teeth Whitening to finally hit P1 for 'Snow'?

I think I saw a G patent for analyzing user response to SERPs with the front-facing camera on a device.

Ammon Johns 🎓 » Nick

*If* the Knowledge Graph entity were being used, it would *probably* be in combination with Google deciding to look for brands that match words in queries. Now, let me immediately underline for anyone following along that this is entirely hypothetical, a thought exercise, and I am feeling very confident that Google are NOT doing this. This is a 'what if' thought experiment to consider a wider range of alternatives, nothing more.

Back to you, Nick, I can't see Google actually doing this, not with so many exact match domains, and with some of the worst products and services often being the most likely to use generic or action based words, while most of the quality companies use words that have nothing to do with the queries that would apply to their products – Amazon, Apple, Jaguar, Ford, all brands that use a word that has nothing to do with what they offer, but are words commonly used in queries that have nothing to do with their brand. (River, Fruit, Big Cat, Ways to cross a river).


Kathy

Are you asking whether CTR can trump engagement with enough clicks? Your words "purely by choosing" seem to indicate that.

If so, we have none other than Brian Dean to answer this question. Many of us probably remember his story about his article on "How to get high in Google" ranking at the top for "How to get high" queries. Google got it wrong initially, and so did the readers when they clicked on it. But eventually his post dropped for everything except the more relevant queries, and our guess is that this was because the engagement sucked, not because clicks dropped off. So we can assume that clicks play a lesser role, at least.

I've never liked the term 'engagement' in the context of rankings because people confuse engagement of the page/site (which Google don't use) and engagement with the search engine, which they absolutely do use.

Bouncing from a page means nothing. Bouncing back to the SERP means a lot more, most especially that whether or not the result they clicked before bouncing back was good, it didn't satisfy, and didn't end the session.

But then people also assume that a bounce back to SERP always means the page was bad. It doesn't. It may have been a great result, and the user simply wants a second confirming opinion, or to compare prices elsewhere in the same SERP, etc.

Google look at the total session, from the first query to the last click, including any search query refinements they made, or secondary searches. It's that whole session that Google want to make faster and smoother.

With machine learning, it might even be technically possible for a not so great result to be promoted because of 'anchoring effect', i.e. a relatively poor first option may make the following options seem even better by comparison, and so lead to a quicker decision, and thus faster overall satisfaction… How's that for mind-boggling?

Kathy » Ammon Johns

Thanks for the engagement explanation! Pogosticking is definitely a sign of poor engagement that Google can read. And a bounce is more often than not a great signal! But how are you sure Google doesn't use the time on page metric as an engagement signal? And because we can also see and track how far they scroll or how long they watched a video, why isn't Google using those?

Your last paragraph boggled my mind. I need to go ponder it. But here's one for you.

I have a client experience that has led me to believe/suspect that a local business in a very competitive space can rank for their main keyword on a pillar page simply by engagement on a subpage that links to it, whether or not anyone actually clicked that internal link (meaning that page gets more bounces than not). I know this seems contradictory to what is accepted in the SEO community, but I don't know what else to attribute a gradual rank increase to. This client has had no SEO done for over a year now and the only thing really "cooking" on his site are a couple of somewhat related, but popular blog posts. Not enough to push him page 1, but enough to get him into the striking zone. Sounds crazy right?

Ammon Johns 🎓 » Kathy

What Google do use is time on the Search Engine Result Page (SERP), including returns and re-search/refinement, and they use it as a negative. Remember, Google's new ideal is often the zero-click SERP from answer boxes and knowledge panels.

The more time overall, looking at all the pages you visit, all the returns to SERP, all the use of a search engine in a session, then technically the worse that SERP was for the user. The user wants to find what they were searching for quickly and be done.

Customer satisfaction – having that search user continue to believe that Google is the best and so continuing to use it in future, without trying rivals – is a major business goal for Google. So, all the Click Through Rate (CTR) data tracking on a SERP that Google do, all the split-testing of one SERP against another that they do every single day, is all about finding which version of their algorithms, query rewrites, and display items, resulted in the fastest customer satisfaction. Where satisfaction is marked by the end of all SERP activity in that session.
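The "fastest satisfaction wins" idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the event logs, the variant names, and the functions `time_to_satisfaction` and `faster_variant` are all hypothetical, and satisfaction is approximated as the last SERP-related event in a session, as described above.

```python
from statistics import mean


def time_to_satisfaction(session):
    """Seconds from the first query to the last SERP-related event in a session."""
    timestamps = [t for t, _ in session]
    return max(timestamps) - min(timestamps)


def faster_variant(a, b):
    """Compare two SERP variants by mean time to satisfaction (lower is better)."""
    mean_a = mean(time_to_satisfaction(s) for s in a)
    mean_b = mean(time_to_satisfaction(s) for s in b)
    return ("A" if mean_a <= mean_b else "B", mean_a, mean_b)


# Hypothetical logs: each session is a list of (seconds since start, event).
variant_a = [
    [(0, "query"), (5, "click"), (40, "return_to_serp"), (48, "click")],
    [(0, "query"), (4, "click")],
]
variant_b = [
    [(0, "query"), (6, "click"), (30, "return_to_serp"), (35, "refine_query"), (50, "click")],
    [(0, "query"), (8, "click"), (25, "return_to_serp"), (33, "click")],
]

winner, mean_a, mean_b = faster_variant(variant_a, variant_b)
print(winner, mean_a, mean_b)
```

Note that variant A wins in this toy data even though one of its sessions includes a return to the SERP: what is scored is the whole session, not any single bounce.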

Pogo-sticking is NOT a negative signal for the site or page. It isn't necessarily even a negative for the algo that ranked that page. Not if even with that bounce back to SERP the *total* search session led to satisfaction sooner.

The most pogo-sticking naturally happens to the top ranked page. People click it, see what is there, then click back to SERP to compare it to other results – ESPECIALLY in ecommerce where they hope to find a better offer, whether or not that first offer was great.

The most pogo-sticking is always on the top ranked, and the lower you go down the ranking, the less pogo-sticking, until you reach the pages that have no pogo-sticking at all because nobody clicked on them at all. 🙂

If someone tells you that pogo-sticking is a negative ranking factor, they have no clue how people use search and compare results.

Time on page, scroll depth, etc are great tools for the website to use to judge its own quality, but terrible, terrible metrics otherwise.

Take scroll-depth. As a human you can look at a page and hopefully know what a customer is meant to do on that page, and whether them scrolling is more likely to mean they were reading, or that they were having trouble finding the answer they were looking for. But even then there are so many, many cases I've seen of owner bias, where what they think is 'engagement' is user frustration. Did they scroll to the bottom because they were NOT satisfied sooner?

Time on page is an awful metric generally. For a start, you have to differentiate between a page that is the active tab in a browser and a page which is in a tab they aren't looking at. I'm sure I am far from the only person who sometimes opens a tab, and it sits open, that page reporting it is loaded, for maybe 30-50 minutes, and then, whether running out of time or called away for something else, I abandon the session having never opened that tab *in view* even once. But time on page would show those 30-50 minutes, when in truth I'd never so much as glimpsed it.

When I'm tracking engagement for a client, for their own purposes, I often use scripting that only runs the timer while that tab is the primary, and if there is no key-pressing or mouse activity for 60 seconds, or if the tab focus is shifted (they look at another tab) the timer stops.

I can do that kind of tracking without it being a breach of privacy because there's no way my script can know what is in the other tabs. It's just javascript, which they have allowed to run in their privacy settings, reporting how the browser is interacting with the page that my javascript is running from.
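The timer rules described above (count only while the tab is focused, stop after 60 seconds without key or mouse activity) can be sketched as follows. This is a Python replay of a hypothetical event log rather than the actual browser JavaScript, which is not shown in this thread. In a browser, the same events would typically come from listeners such as `visibilitychange`, `focus`, `blur`, `keydown`, and `mousemove`. The event names and the function `engaged_seconds` are assumptions for illustration.

```python
IDLE_LIMIT = 60  # seconds without key or mouse activity before the timer stops


def engaged_seconds(events, idle_limit=IDLE_LIMIT):
    """Total the time a tab was focused and recently active.

    events: iterable of (timestamp_seconds, kind), where kind is one of
    'focus', 'blur', 'activity', 'unload' (hypothetical names).
    """
    total = 0
    focused = False
    last_activity = None  # time of the last key/mouse event or focus gain
    last_tick = None      # time up to which engaged time has been counted
    for t, kind in sorted(events):
        if focused and last_tick is not None:
            # Count time only up to the idle cutoff after the last activity.
            cutoff = min(t, last_activity + idle_limit)
            if cutoff > last_tick:
                total += cutoff - last_tick
        last_tick = t
        if kind == "focus":
            focused = True
            last_activity = t
        elif kind in ("blur", "unload"):
            focused = False
        elif kind == "activity" and focused:
            last_activity = t
    return total


# Hypothetical visit: read for a bit, go idle, switch tabs, come back briefly.
visit = [
    (0, "focus"), (10, "activity"), (100, "activity"),
    (120, "blur"), (900, "focus"), (910, "unload"),
]
print(engaged_seconds(visit))

# A tab that sat in the background for 40 minutes and was never viewed.
print(engaged_seconds([(2400, "unload")]))
```

In the first visit, a naive time-on-page figure would be 910 seconds, while the engaged timer counts far less. The never-viewed tab counts as zero.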

Google can't do that with Chrome, because they know what is in ALL of the tabs. It would be a major breach of privacy. Additionally, Chrome doesn't have the kind of user base that most assume.

You see, studies on which browsers are used have an important word in there: used. The studies look at thousands or millions of user sessions, look at what percentage were from Chrome, and say that it has 80 percent or more.

But that's not people, that is sessions. The actual truth is that at least 50% of all people who own a Windows-based computer don't use it that often, and still have it on the defaults of Microsoft Edge, with Bing as their search engine.

When you look beyond the first-world countries, there are millions of people who only have access to rather ancient PCs that still run long outdated operating systems with IE5 and IE6 on them.

People who *don't* use Chrome are kind of 'the silent majority', often the kind of ordinary non-geeky folks who only use the internet rarely, checking Facebook to see what their family and friends are up to once or twice a week. And because they don't do much, they don't show up in the usage data.
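The sessions-versus-people point is easy to see with a worked example. The numbers below are invented purely to show the arithmetic (they are not survey data), and the function name `shares` is hypothetical.

```python
def shares(groups):
    """Compare each browser's share of people with its share of sessions.

    groups: list of (browser, number_of_people, sessions_per_person).
    """
    total_people = sum(people for _, people, _ in groups)
    total_sessions = sum(people * per for _, people, per in groups)
    return {
        browser: {
            "people": people / total_people,
            "sessions": people * per / total_sessions,
        }
        for browser, people, per in groups
    }


# Hypothetical week: 30 heavy users on Chrome, 70 light users on the default browser.
result = shares([("Chrome", 30, 40), ("Edge", 70, 2)])
for browser, share in result.items():
    print(browser, round(share["people"], 2), round(share["sessions"], 2))
```

With these made-up inputs, Chrome accounts for 30% of the people but roughly 90% of the sessions, which is how a usage study and a head count can tell very different stories.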

Anyway, short version is that 'engagement metrics' actually suck for a search engine except, just like for anyone else, they are looking at how someone engages with their OWN pages – the SERPs.

Kathy » Ammon Johns

Thanks. Lots to think about here. I've always tried to think like Google while understanding their goal is to give people what they want. Zero clicks is definitely a result of that. As far as being able to judge whether the content satisfies, I think you're saying Google is not any smarter today than it was 20 years ago because it's smart enough to know that unless it's sitting in the room with the reader it really can't tell for sure what that person is getting out of it, not by the dwell time, not by the scroll depth, not by the bounce, just about not by anything. Ok then. Got any good link builders you'd recommend? 😁😉

🤭


Steve

Many years ago when Yahoo! was running their own search engine they had a testing version with sliders that the user could move to adjust the SERPs in real time. Too many shopping results when you wanted informational results? Move the slider and the shopping would move down the page and the informational would move up. I don't recall what the other sliders did anymore, it's been too many years, but it was a very cool solution to a very real problem where guessing at search intent can be a real issue.

Cory

I think it has everything to do with rank, as part of a really long equation. Google has abandoned their FLoC tracking for the Topics API. So the theory is that if I perform a topical search on a query, it will be weighed on relevance and interaction. Click Through Rate (CTR) is the beginning of a very long authority signal that is very broken. So if my Google profile (super important here: cookies, trackers) has a history of performing search queries with topical relevance on click, the engine is going to learn that about my interests. By the same theory, run the same search in a Brave incognito window over Tor and you will see how much a blind pull differs from a "user's" experience. I have full confidence that Google is using account-specific search data to sculpt user experience. It all points to a fast food mentality: how fast can we get it? They have personalized the biggest search engine in the world. They get more data on user behavior in a day than most companies get in a lifetime. Better believe it's being used to sculpt user behavior. Sorry for the novel. Lots of feelings on this.

Humphrey

Can you 'Google bomb'? Google tests the behaviour of pages for specific queries to qualify them over time. You want to be the last click people go to, so focus on that. Have a stupidly high bounce rate and low clicks? That is a sign you need to clean up or trim your content. Trying to be everything to everyone isn't generally a winning strategy.

Some queries can be satisfied in 10 seconds or less and a bounce rate that's high isn't an issue. For this reason, bounce rate is probably not my big concern.

I do agree that bounce rate is an issue for long form content though.

Although, with the recent updates, Google can take people straight to a paragraph on your page. Maybe bounce rate is too nuanced to be a helpful metric?

Humphrey » Andrew

It really depends on the page as you mentioned. You want to be the last page people visit and you'll notice time on page with behaviour that will be glaringly obvious if they are getting what they need. I look at the month over month behaviours and watch for shifts.

John

The answer is yes. This was tested extensively by the team at Moz many years ago.

Link or it didn't happen 😄

Steve Toth 🎓 » Andrew

Rand was in a big room of people. He told them to search for something specific and click the 8th result (or something that wasn't #1), and that page then shot up temporarily.

John » Andrew

Pogo sticking.

https://www.YouTube.com/watch?v=_quS6fFPt5U (Solving the Pogo Stick Problem, Whiteboard Friday, Moz)

John » Steve Toth

He performed the same test a number of times via Twitter also.

Ammon Johns 🎓 » Steve Toth

It was at a conference, plus live-tweeted from it, so it was a few thousand people at least. It shifted the Search Engine Result Page (SERP) in almost real-time at that scale, but it didn't change the ranking scores of the site, it changed what scores the algorithm for that SERP was looking for.

It's a built-in feature to handle news and burstiness in temporal web dynamics. There are several papers on it.

The effects of Rand's experiment started to drop off again within a day, and all back to normal within 72 hours, even though we know tweets still get interaction some days later. My feeling then is that even with a few clicks still doing the exact search he asked, and clicking the exact results he asked, without the volume of difference, the usual patterns changed the algo behaviours right back just as quickly as they'd changed before.

Andrew ✍️ 🎓 » Chris Thatcher

This is probably a helpful discussion. Any time you are looking at the search results and wondering why your page isn't ranking or why it fell in ranking, this is often to blame.

People try to look at links, on-page, schema, etc. but sometimes the answer is simple and clear as day.

Micha » John

And the Moz experiment has been debunked time and again.
