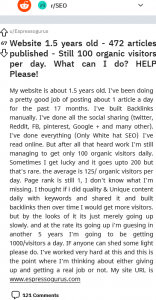

How do you handle link equity dilution for a site producing content?

If you're pushing out content regularly, it's hard for link building to keep pace, and you end up with some kind of link equity dilution.

Not all articles attract links, and before you know it, 80% of your articles have no inbound links while only 20% attract links.

In a perfect world, it would be great if they all got links, but do you slow content production to match the link building resources?


📰👈

You write a higher proportion of content that is designed to gain links as a marketing objective. Just as you can make content that is designed to sell products, or content that is designed to alleviate fears and concerns, a good proportion of your content should always be about getting noticed, and being mentioned to others (links).

That way, the more content you create, the more links you get, automatically, over time. That in turn makes your site more 'important' to search engines, more of a landmark and point of interest as they map out the link graph, so they give the site a higher crawl priority: more of your content gets crawled sooner and more often.

Any article you produce that doesn't get links needs to be AMAZINGLY good at driving conversions instead, or it was largely a waste of time. If 80% of your articles are unremarkable (links are the remarks of the online world) and getting no links at all, then you need to revise the content strategy. Try a 50:50 mix for a while and see how that does for you. If the site is already large (thousands of pages), you may need to go higher than a 50:50 ratio, at least in the short term. Basically, it's a PR and link-attraction campaign run through content marketing.

In particular, make a list of the sites you'd most love to get a link from. Then go through them, researching what they already link to and why. Research their tastes, and make content that is tuned specifically to be super-attractive and irresistible to those tastes.

I like your reasoning, but many topics rank without links. E.g. a competitor covers the topic and it shows for long-tail keywords, but the articles aren't link-worthy, nor does the long-tail volume need something link-worthy. What I do is check whether Google shows a unique article for the keyword or whether it can be covered as a subsection in an existing article. If Google favours a unique article, we put one out, but it's not going to attract links. There's just not enough meat to the unique subtopics. That's where I'm getting confused. It's a niche site with around 100 pages, and we're now pretty sure we've covered the topic better than other sites. I'm just worried that 75 of these pages aren't going to naturally get any links unless we focus on a guest post or other incentivised link-building push.

Ammon Johns 🎓 » Alex

Nothing ranks without links (other than in Local Search, which doesn't use PageRank). It's just that the backlinks of those pages are the navigation links, and the site has enough power flowing into it in various places (or even just the homepage) that the internal navigation links are powerful.

Not every page needs external links, but external links have to flow into a site somewhere for the internal links to distribute that power to the pages you need to rank. That's why I suggested a 50:50 split to start with: half your effort on building the power, half on using it to rank the pages that perform and convert.
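To make the idea of external power entering a site and being distributed by internal navigation concrete, here is a toy sketch. It is a simplified, PageRank-style model, not Google's actual algorithm: the page names and site graph are hypothetical, the external inflow is an arbitrary unit, and the 0.85 damping factor is just the value commonly cited from the original PageRank paper. Only the homepage receives external links, yet every page ends up with some equity via the navigation links.

```python
def distribute_equity(internal_links, external_inflow, damping=0.85, iterations=100):
    """Toy model: equity enters via external links and flows along internal links.

    internal_links: dict mapping each page to the list of pages it links to.
    external_inflow: dict mapping pages to the equity they receive from other sites.
    Each round, a page passes `damping` of its current value, split evenly,
    to the pages it links to.
    """
    scores = {page: 0.0 for page in internal_links}
    for _ in range(iterations):
        # Each round starts with whatever flows in from external sites.
        new_scores = {page: external_inflow.get(page, 0.0) for page in internal_links}
        for page, targets in internal_links.items():
            if not targets:
                continue  # a page with no outlinks passes nothing on in this model
            share = damping * scores[page] / len(targets)
            for target in targets:
                new_scores[target] += share
        scores = new_scores
    return scores


# Hypothetical five-page site: only the homepage has external backlinks.
site = {
    "home": ["guide", "tools"],
    "guide": ["home", "article-a", "article-b"],
    "tools": ["home"],
    "article-a": ["guide"],
    "article-b": ["guide"],
}
scores = distribute_equity(site, external_inflow={"home": 1.0})
for page, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:10s} {value:.3f}")
```

In this toy graph the values settle at roughly 2.46 for home, 2.02 for guide, 1.05 for tools and 0.57 for each article: the articles have no external links of their own, but the power flowing into the homepage still reaches them through navigation.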

Alex ✍️ » Ammon Johns

That makes sense. Going to republish some of our existing pages and then focus on links! 🙂

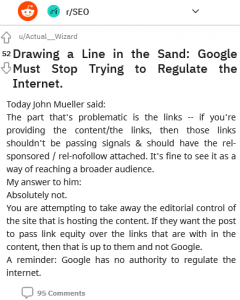

Out of interest, do you know what the November 2021 core update was about? I'm struggling to find info on what it affected. It seems to be related to the Knowledge Graph. I saw this, but it's all pretty vague:

https://www.searchenginejournal.com/google-november-2021-core-update-over/428850/ (Google November Core Update Is Over – What Happened?)

Ammon Johns 🎓 » Alex

Our only real clue, and it could be a red herring, is that two really notable things changed. The first, of course, is that crawl-priority-related stuff seems to have changed, although that might have more to do with the spam update, given that thresholds seem to have changed. The second, and the one I personally think is more likely, is that we've seen a lot more updating and change happening in the Knowledge Graph – as mentioned in the article from Roger that you referred to.

Roger and I each independently felt that this was one of the infrastructural updates, where they change the hardware (or software) to allow for later changes, rather than something where we see immediate, clear differences. The Hummingbird Update was a lot like that in some ways: it was live for some weeks without being announced, and no SEOs noticed it.

Alex ✍️ » Ammon Johns

It's interesting you say that, because on one site in the portfolio I noticed a drop a few weeks before the update rolled out. We'd been improving it as much as we could, and it kept dropping. What would you focus on if this update did hit it?

Ammon Johns 🎓 » Alex

Given that I don't know what the site is, the keywords involved, the market or demographics it is targeting, or what main strategies have been employed, any guess would not just be wild, it would be feral. 😃

Alex ✍️ » Ammon Johns

I understand. Thought there might be a core theme to focus on related to the update! Will dig and report back.

Ammon Johns 🎓 » Alex

The fact that you saw the dip before the update rolled out means I'd feel pretty confident that the update wasn't the issue. The spam update rolled out first, so I'd probably be looking at whether sites I'd gotten backlinks from might have been devalued, thus suddenly passing less juice to mine.

Incidentally, this is exactly the kind of case that illustrates why I never, ever submit pages for crawling, but rather wait for Google to find and crawl them in its own time. If my site had lost some of its importance, i.e. fewer of its links were counting, the time it takes Google to discover and index fresh content would likely increase, giving me that signal.

<Edit to add>Note that links and site importance are not the only factors in crawl priority, and topic (whether it is trending, more or less popular, in or out of season) also matters a lot. </edit>

📰👈

Bent

You can focus on building links to your best content rather than spreading links evenly across all articles. This will help ensure that your best content receives the majority of the links, and from there you can spread that equity with internal links. Of course, you'll still want to build links to your newer content too, so that it can start to attract links over time.

Gillispie

Target hubs and pillar posts and sprinkle in some acquired links to their supporting content.

Once the entire cluster is lifted (rising tide), they usually attract some level of organic links (if your content is worth a shit).

Getting the cluster to the shore is the hardest part.

Been doing it like this for years. Used to be semi-secret sauce back in the day.

Thanks. When you say sprinkle links, are we talking 2 or 3 or 10 to 20 per article?

Gillispie » Alex

I send most links to the pillar and/or hub and then sprinkle in some to supporting content. Which supporting content gets links depends on how hard it is to rank. How many links depends on the effects of previous links. I don't send very many at once. I like shit looking nice and natural. When shit is bouncing around in the top 5 or 8, you've probably got enough.

I send maybe 1-5 links to pillar content and then a couple of links to different supporting content. Wait, and repeat.

My onpage does much of the heavy lifting.

Keep in mind I run informational content sites. If you have a different kind of site, you may need to adapt.

And this is only if my internal links aren't enough to already power things up (my two biggest sites are DR68 and 69).

A sprinkle there, a dash there…just to encourage shit to have a chance at eyeballs.

Superior content along with algo math wins the award at the end of the day.

One of my sites is fairly seasonal…so it gets most of its built links (and organic) when it makes sense…at the start and midway through the season.

Nice and natural.

Alex ✍️ » Gillispie

Thanks. That sounds great and I'll keep that in mind. I guess I just need to focus on getting more links!

How long are you finding it takes before the links kick in and you see a boost?

Gillispie » Alex

Links tend to power up over time, so it's hard to say. They seem to gain trust or power, not sure which. They seem to hit their peak 6-8 months after they were placed. Probably an old Google tactic to stop churn and burn.

I'm sure there will be someone to argue with me on that point in a few minutes.

📰👈

Roger

I'm not going to share the details but, in my opinion, the hint I'll pass along is that it's better to not focus on individual updates but to step back and look at the overall picture.

There are some enterprise-level web devs whose business model is site audits, and they were the ONLY ones on social media hyping the update as the most disruptive ever. It never occurred to them, and I didn't bother pointing out to them, that their point of view is highly biased and in no way represents reality.

The impact was pretty much the same as any other day and as Ammon Johns noted, what you experienced does not seem to be from the update, which aligns with what most people saw from that update.

Jason Barnard noticed the extraordinary volatility in the Knowledge Graph; that's important, imo. We know that Core Algorithm Updates contain changes that affect a wide range of the algorithm, not just ranking. Given the low impact of that update, it makes sense that it was probably more infrastructure-related, and any changes you experienced were part of the daily changes that happen, some of which COULD be ripples from the spam update.

Circling back to where I began, it's good to know the granular details of individual updates to avoid bad business decisions. But it's best to step back and look at the forest and where the entire Google index is headed.

"COULD be ripples from the spam update." -> that's the next thing I'm going to check out. For this update, do you think it could be links harming the site, or might it be that previous links just got devalued and so the site is now lacking link power?

Roger » Alex

Unless it's a manual action, links do not harm a site. They either work or they don't work.

I would focus on the content. The BIG problem I'm seeing today is that everyone thinks their content is fine. The assumption nowadays is that what's wrong is that ONE BIG THING that they are missing.

But it's rarely ever that ONE BIG THING that is missed, that when fixed, makes everything all right.

More and more I'm seeing that it's a whole lot of LITTLE things working together to bring the site down.

Alex ✍️ » Roger

Interesting take on the links. What's weird about this site is that we've:

– improved site speed
– improved the layouts
– massively improved the content (and I mean massively)
– added about 75 articles
– added schema
– improved internal linking
– improved E-A-T signals
– boosted many of the user metrics

We've built a few links, but not many. What's weird is that it dropped in mid-November and still hasn't bounced back. So now I'm digging into what to look at next!

Roger » Alex

Probably the content. No offense, but most people think their content is great without really understanding what "great" means.

There are no E-A-T signals.

Alex ✍️ » Roger

Thanks. I'll double check the content.

Ammon Johns 🎓 » Alex

With regard to what *might* sound like a conflict between what Roger said about links and what I said, we're actually both in agreement. There's a chance that some of the links powering the pages (on other sites) that in turn link to you stopped working. That would mean those pages had less 'juice' to pass through their links.

Backlink tools can't help with this, as they only match the raw links; they cannot possibly know which ones may have been disavowed by a site, or which ones Google has de-powered and thus treats as if disavowed by all sites.

Without the tools, you kind of have to go with gut instinct, *or* you just play safe and earn a few extra good links and see if that brings it back to the prior levels (which tells you some of the old links were not counting as before).

Roger

Alex, I'm not speaking about you but about the SEO industry in general: much of it is so out of touch with what quality means that it needed to be told that a proper product review is one in which the product is actually handled and tested. It's really sad that they needed to be explicitly told.

To them, great content WAS about keywords, word count, authors, EAT and all sorts of things that have zero impact on what makes a review a review.

A few years back a review company came to me for an audit after having wasted time with a popular SEO who had them add author information, disavow links and other 100% useless and inappropriate activities.

What was wrong was that they weren't reviewing actual products. Duh, right?

So they took my audit and built a new site adhering to the best practices I gave them and they're doing great with this new framework on what "quality content" really means. This was two years before Google came out with the product review update and the product review guidelines.

Roger » Ammon Johns

100% agreement.

📰👈

Micha

It doesn't really work that way.

Any page that is indexed is assigned some basic PageRank distribution. So while merely adding content doesn't guarantee you sufficient PageRank-like value to be competitive, the very fact of being indexed means the page HAS some PageRank.

Also, your internal linking passes PageRank around your site. So regardless of where it comes from, it circulates from page to page until it decays out of the signal.

So you don't have to earn links for every page and it's not helpful to think in terms of "I need [X] links per page."

Also, keep in mind that any given document only earns a minuscule amount of PageRank-like value. You can't capture much through typical link building strategies. There just isn't that much value flowing from those links to your site.

There are other intrinsic qualities of links (from the fact of their being included in the search engine's link graph) that help a document/site appear in and rank for queries.

The vast majority of documents never receive any links from other sites at all.

And most sites receive very few if any links at all.

It doesn't take that many links from other sites for a site to rise above the median level of "sites with inbound links" in any search engine index.
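Micha's point that every indexed page gets some baseline value, and that value circulating through internal links decays as it goes, both fall out of the textbook PageRank formula: PR(p) = (1 - d)/N + d * (sum of PR(q)/L(q) over every page q linking to p), where N is the number of pages, L(q) is the number of outlinks on q and d is the damping factor. The sketch below is that textbook version run on a hypothetical four-page site, not whatever Google uses today, and it assumes every page has at least one outlink so dangling pages need no special handling.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Textbook PageRank by power iteration.

    links: dict mapping each page to the pages it links to.
    Assumes every page has at least one outlink (no dangling pages).
    """
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts the round with the same baseline share, (1 - d) / N.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page, targets in links.items():
            # A page passes on `damping` of its rank, split across its outlinks.
            share = damping * ranks[page] / len(targets)
            for target in targets:
                new_ranks[target] += share
        ranks = new_ranks
    return ranks


# Hypothetical site: "orphan" is indexed and links out, but nothing links to it.
site = {
    "home": ["a", "b"],
    "a": ["home"],
    "b": ["home", "a"],
    "orphan": ["home"],
}
ranks = pagerank(site)
print({page: round(rank, 4) for page, rank in ranks.items()})
```

The orphan page still ends up with (1 - 0.85)/4 = 0.0375, the baseline that comes purely from being in the index; anything above that has to arrive through links. The damping factor is also what makes value decay out of the signal: after k hops, at most d^k of a page's rank is still being passed along, e.g. 0.85^3 ≈ 0.61 after three hops.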


Sometimes that 'median level' gets you to about page 248 of the SERP though, where the top 1% with the pro SEO teams who know what they are doing are still filling the first 5 pages of a SERP. The market, and query, make so much difference here.

I tend to assume that in an SEO group there's a higher than usual probability that it's a competitive term with money in it, and one that more than one SEO will be interested in. I've seen it too many times over the years: someone comes into a forum or group saying they are new and just getting started, with some small, unambitious project, and then it turns out they are trying to rank for the word 'travel' or something. 😃

We agree on the basic premise – all you need is a bit more link power than the sites you want to be above. But in competitive spaces with a lot of professional SEO and link-building, sometimes accumulated over many years, that can be a lot more than "very few links". Context is everything.

Micha » Ammon Johns

Yes, you are correct. But then, this is why long-tail content plays work so well. People can shift the risk in their content portfolio away from highly competitive keywords and leverage both existing and new content for future optimization.

So the idea that one should slow or speed up content production is based on the false premise that the pace of content production has something to do with the competitiveness of a site.

It really boils down to where you invest your time and resources. Some people will make good choices on any given day and others will make bad choices on that same day. And even the best of us have a mix of good and bad days.

Ammon Johns 🎓 » Micha

So, you're saying we should all work nights, right? 😉

🤭

Alex ✍️ » Micha

So, to make sure I understand correctly: if you have 100 pages or 1000 pages it won't make much difference if your inbound link equity is fixed? If so, why do people talk about content pruning?

Micha » Alex

"Why do people talk about content pruning?"

Because … reasons?

"…if you have 100 pages or 1000 pages it won't make much difference if your inbound link equity is fixed."

I wouldn't say THAT, either.

Does inbound PageRank get diluted as a site outgrows its link profile's growth? Sure.

But that's not an SEO problem.

If the site isn't losing traffic, then one just needs to focus on maintaining or improving the quality of the content and the user experience.

Either the site earns links or it doesn't. But if it's earning search and non-search referral traffic, then it's doing okay. If it's growing its traffic, that's even better. If it's growing its conversions, that's even more better.
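The dilution Micha concedes, and Alex's 100-versus-1000-pages question, can be illustrated with a very simple toy model. Assume a hypothetical hub-and-spoke site where only the homepage receives external equity, the homepage links to every article, and each article links back only to the homepage. This is an illustrative assumption for the arithmetic, not a description of how Google weights pages.

```python
def hub_and_spoke(num_articles, external_inflow=1.0, damping=0.85, iterations=200):
    """Return (home_value, value_per_article) for a toy hub-and-spoke site.

    The homepage splits `damping` of its value evenly across all articles;
    each article passes `damping` of its value back to the homepage.
    By symmetry every article holds the same value, so one number tracks them all.
    """
    home, article = 0.0, 0.0
    for _ in range(iterations):
        new_home = external_inflow + damping * num_articles * article
        new_article = damping * home / num_articles
        home, article = new_home, new_article
    return home, article


for n in (100, 1000):
    home, per_article = hub_and_spoke(n)
    print(f"{n:5d} articles: home={home:.4f}, per article={per_article:.6f}, "
          f"all articles combined={n * per_article:.4f}")
```

In this model the homepage settles at E/(1 - d^2) no matter how many articles there are, and the articles share a fixed total of d * E/(1 - d^2). Going from 100 to 1000 articles leaves that combined total unchanged but cuts each article's share tenfold, which is the dilution in its simplest form. Whether it matters in practice is the separate question Micha raises about traffic and conversions.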

📰👈