u/MarceloDesignX

10 Smart Google SEO Tips

Hi there! This article is based on the day-to-day work I do on my clients' websites, so I decided to put together a short summary of tips that can significantly boost your website's ranking.

1. Ensure Your Content Is Created Around a Primary Keyword and Relevant Secondary Keywords

Researching keywords for your content not only helps to develop the framework of your piece; it also enables you to understand what your audience wants to read. Understanding what keywords are best for your target audience and content type can help you build a content strategy to boost Search Engine Optimization (SEO).

Google-friendly writing depends on striking a balance between keywords and everyday language. This means your content should be written so that it flows naturally. Keywords should come up naturally in your content so that you don't have to stuff them in at the last minute. One way to do this is to identify keywords that are semantically related to your primary keyword target.
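If you want a quick check that a draft actually covers your primary keyword and its related secondary keywords (and isn't hammering any one of them), you can count whole-phrase occurrences. This is a minimal sketch: the draft and keywords are made-up examples, and it only counts exact phrase matches, so it knows nothing about semantics.

```python
import re
from collections import Counter


def keyword_counts(text: str, keywords: list[str]) -> Counter:
    """Count case-insensitive, whole-phrase occurrences of each keyword in text."""
    lowered = text.lower()
    counts = Counter()
    for kw in keywords:
        # \b word boundaries keep "seo" from matching inside words like "seoul"
        pattern = r"\b" + re.escape(kw.lower()) + r"\b"
        counts[kw] = len(re.findall(pattern, lowered))
    return counts


# Hypothetical draft and keyword targets
draft = (
    "Local SEO helps small businesses get found. A good local SEO plan "
    "includes a Google Business Profile and consistent citations."
)
primary = "local seo"
secondary = ["google business profile", "citations", "reviews"]

counts = keyword_counts(draft, [primary] + secondary)
missing = [kw for kw in secondary if counts[kw] == 0]
print(counts[primary], missing)
```

Secondary keywords that come back as missing are candidates to work into the text naturally; an unusually high count for the primary keyword is a hint that you may be stuffing it.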

2. Diversify Your Backlink Portfolio

Even if you follow all of the tips related to on-page technical SEO, that still won't guarantee you a spot on the front page of Google. A big part of SEO deals with backlinks and whether you're earning backlinks from high-authority sites.

Backlink diversity can come from two sources:

The type of backlink: Generally, a backlink will be either dofollow or nofollow, with dofollow links carrying more weight.

The site where the backlink originates: If you're promoting your content, for instance, and pitching publishers to run a story, the site that links back to your content is the source of your backlink.

A diverse backlink portfolio signals to Google that your site is an authoritative source and that you're generating links in a natural way versus relying on outdated black hat or other spam tactics.
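To get a rough picture of how diverse your own portfolio is along those two dimensions, you can summarize a backlink export. A minimal sketch, assuming a hypothetical export of (source URL, rel attribute) pairs; the example data is invented. Note that there is no real "dofollow" attribute: a link is simply followed unless its rel contains "nofollow".

```python
from collections import Counter
from urllib.parse import urlparse


def summarize_backlinks(backlinks: list[tuple[str, str]]) -> dict:
    """Summarize (source_url, rel_attribute) pairs from a backlink export."""
    follow_types = Counter()
    domains = Counter()
    for source_url, rel in backlinks:
        tokens = rel.lower().split()
        # "dofollow" just means the nofollow token is absent
        follow_types["nofollow" if "nofollow" in tokens else "dofollow"] += 1
        host = urlparse(source_url).hostname or ""
        domains[host.removeprefix("www.")] += 1  # treat www.x and x as one domain
    return {
        "total": len(backlinks),
        "dofollow": follow_types["dofollow"],
        "nofollow": follow_types["nofollow"],
        "referring_domains": len(domains),
        # share of all links coming from the single biggest referring domain
        "top_domain_share": max(domains.values()) / len(backlinks) if backlinks else 0.0,
    }


# Hypothetical export
links = [
    ("https://news.example.com/story", ""),
    ("https://www.blog.example.org/post-1", "nofollow"),
    ("https://blog.example.org/post-2", "noopener nofollow"),
    ("https://forum.example.net/thread/9", "ugc nofollow"),
]
print(summarize_backlinks(links))
```

A high top_domain_share, or a portfolio that is almost entirely one link type, is the kind of lopsided profile the paragraph above warns about.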

Need help finding the right backlinks? The Backlinks Analytics Tool can identify your referring domains and total backlinks. It also lets you compare up to four competing URLs to get a sense of where there might be an opportunity.

3. Use Effective Header Tags to Target Google Featured Snippets

When developing content, you want to be mindful of how you structure your content on the page. Every page should have content organized logically, with the most important information at the top of the page. In fact, studies have found that 80% of readers spend most of their time looking at the content at the top of the page.

However, Google doesn't necessarily judge context solely by what's at the top of the page. It looks at the article as a whole to see whether it's comprehensive, so with that in mind, you'll need to put some thought into how your page is laid out.

To get the most out of the keywords you're targeting, consider adding jump links to the top of your page. This not only makes for a more enjoyable user experience, but it also allows you to use your header tags in more creative ways to go after Google featured snippets.
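Jump links work by giving each header an id and linking to it with a #fragment URL. Here is a minimal sketch that generates both from a list of header texts; the header texts, the use of h2, and the id scheme are illustrative assumptions, and this simple version doesn't handle two headers that produce the same id.

```python
import html
import re


def slug(text: str) -> str:
    """Turn header text into an id such as 'what-is-a-featured-snippet'."""
    text = re.sub(r"[^a-z0-9\s-]", "", text.lower())  # drop punctuation
    return re.sub(r"[\s-]+", "-", text).strip("-")  # spaces/hyphens -> single hyphen


def jump_links(headers: list[str]) -> str:
    """Build an HTML list of jump links followed by the matching <h2> tags."""
    items, sections = [], []
    for title in headers:
        anchor = slug(title)
        safe = html.escape(title)
        items.append(f'  <li><a href="#{anchor}">{safe}</a></li>')
        sections.append(f'<h2 id="{anchor}">{safe}</h2>')
    return "<ul>\n" + "\n".join(items) + "\n</ul>\n" + "\n".join(sections)


print(jump_links(["What Is a Featured Snippet?", "How to Win Featured Snippets"]))
```

In a real page the h2 tags would of course sit above their own sections further down; they are printed together here only to show that the ids and hrefs line up.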

4. Use Clean URLs and Meta Descriptions

Your URL and meta description are key factors in helping Google understand what your content is about. Although there is no hard and fast rule about the length of either of these to improve your rankings, the goal should be to make them both as concise and clear as possible while including your target keywords.

Below are some best practices for keeping your URL and meta description as clean as possible:

Use a consistent structure that keeps future posts in mind: Whether you're organizing specific content in one area of your site or creating similar content down the road, you'll want to keep your URL and meta description structure somewhat similar for related content.

Avoid superfluous language: For both, avoid fluffy language. Each should be short and to the point.

Make them keyword-rich: Again, be sure that your URL and meta description include the keyword or keywords you're targeting.
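The three practices above can be turned into a quick pre-publish check. A minimal sketch, assuming a hypothetical list of filler words to drop from slugs; it deliberately enforces no length limit, since there is no hard rule about length. The URL check is a plain substring match, so it can give false positives (e.g. "shoes" inside "horseshoes").

```python
import re

# Hypothetical filler words to strip from URL slugs
FILLER = {"a", "an", "the", "and", "of", "to", "for", "your", "our"}


def clean_slug(title: str) -> str:
    """Lowercase, drop punctuation and filler words, join the rest with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(w for w in words if w not in FILLER)


def check_snippet(url_slug: str, meta_description: str, keyword: str) -> list[str]:
    """Return a list of problems; an empty list means the basic checks pass."""
    problems = []
    if "-".join(keyword.lower().split()) not in url_slug:
        problems.append("keyword missing from URL")
    if keyword.lower() not in meta_description.lower():
        problems.append("keyword missing from meta description")
    return problems


url_slug = clean_slug("The 10 Best Running Shoes for Flat Feet")
print(url_slug)
print(check_snippet(url_slug, "Compare the best running shoes for flat feet in 2021.", "running shoes"))
```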

5. Decrease Load Times on Top-Performing Pages

Slow loading times are one of the primary causes of high bounce rates. According to Google, "speed equals revenue," which essentially means that slow loading times increase the odds that your visitors will leave your page. For this reason, your SEO ranking depends in part on the speed of your website.

An auditing tool can be a valuable resource to gain an understanding of your website's speed. With a page speed auditing tool, you can learn about what might be slowing your website down and how you can better address that. It can also scan for common SEO mistakes so that you can improve your website content for an SEO boost.
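Since the goal is to speed up your top-performing pages first, one simple way to use audit results is to combine them with traffic numbers and work down the list. A minimal sketch, assuming you've copied sessions from your analytics tool and load times from a speed audit into the hypothetical PageStats records below; the 3-second cutoff is an arbitrary example, not a Google threshold.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    monthly_sessions: int  # from your analytics tool
    load_seconds: float    # from a page speed audit


def speed_priorities(pages: list[PageStats], max_seconds: float = 3.0, top_n: int = 5) -> list[str]:
    """Return URLs of the busiest pages that load slower than max_seconds."""
    slow = [p for p in pages if p.load_seconds > max_seconds]
    slow.sort(key=lambda p: p.monthly_sessions, reverse=True)  # most traffic first
    return [p.url for p in slow[:top_n]]


# Hypothetical numbers
pages = [
    PageStats("/pricing", 12000, 4.8),
    PageStats("/blog/seo-tips", 30000, 2.1),
    PageStats("/blog/backlinks", 9000, 5.5),
    PageStats("/about", 400, 6.0),
]
print(speed_priorities(pages, top_n=2))
```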

6. Numbers in Titles

Along with meta descriptions, test your titles. A study shared recently showed that adding dates to titles increased rankings for a particular brand. Numbers are one thing I always test in title tags, and they usually produce pretty consistent results. Dates in title tags in particular (for example, "January 2021") are often a winner.

Don't be spammy about it: don't include a number if it doesn't make sense, and don't fake it. But if you can include a number, it will often increase your click-through rate for any given query.

7. Increase Internal Linking

When a lot of top SEO agencies need to increase rankings for clients quickly, they know there are generally two levers that are the easiest to pull: first, title tags and meta descriptions (what gets more clicks), and second, increasing internal linking.

You can almost certainly increase the internal links on your site, and there are probably some opportunities you just haven't explored. The next tip covers an easy way to do that without too much work.

8. Update Old Content with New Links

This is a step that we see people skip time and time again. When you publish a new blog post or any other new piece of content, make sure you go back and update your old content with links to it.

Start with the top keyword you want the new page to rank for. Then use Google Search Console or a tool like Keyword Explorer to see which other pages on your site already rank for that keyword, and add links from those pages to the new content. I find that when I do this, time and time again, it lowers the bounce rate. You're not only refreshing your old pages with fresh content, fresh links, and added relevance; you're also sending links to your new content. So whenever you publish new content, update your old content with links to it.
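The tip above uses Search Console or a keyword tool to find which pages rank for the keyword. As a simpler stand-in (not the same thing), you can build a first list of candidates by searching your own pages for the keyword and skipping any that already link to the new post. A minimal sketch, assuming a hypothetical dict mapping each page URL to its HTML.

```python
import re


def link_opportunities(pages: dict[str, str], keyword: str, new_url: str) -> list[str]:
    """Return URLs of existing pages that mention `keyword` but don't link to `new_url`.

    This is a plain-text check; it doesn't know which pages actually rank
    for the keyword.
    """
    kw = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    href = re.compile(r'href=["\']' + re.escape(new_url) + r'["\']')
    return [
        url
        for url, html_text in pages.items()
        if url != new_url and kw.search(html_text) and not href.search(html_text)
    ]


# Hypothetical site content
site = {
    "/blog/old-guide": "<p>Our anchor text guide explains the basics.</p>",
    "/blog/linked": '<p>See the <a href="/blog/anchor-text">anchor text</a> post.</p>',
    "/about": "<p>We are an agency.</p>",
    "/blog/anchor-text": "<h1>Anchor Text in 2021</h1>",
}
print(link_opportunities(site, "anchor text", "/blog/anchor-text"))
```

Cross-checking this list against the pages that actually rank for the keyword in Search Console narrows it down to the pages most worth updating.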

9. Invest in Long-Form Content

I am not saying that content length is a ranking factor. It is not. Short-form content can rank perfectly well. The reason I want you to invest in long-form content is that, time and time again, when we study this, long-form content consistently earns more links and shares.

It also generally tends to rank higher in Google search results. This is nothing against short-form content; I love short-form content. But long-form content generally gives you more bang for your buck in terms of SEO ranking potential.

10. Use More Headers

Break up your content with good, keyword-rich header tags. Why? Research from A.J. Ghergich shows that, generally, the more header tags a page has, the more featured snippets it ranks for. Pages with 12 to 13 header tags, which seems like a lot, ranked for the most featured snippets of anything in their most recent study.

So make sure you're breaking up your content with header tags. It adds a little contextual relevance. It's a great way to add some ranking potential to your content.
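To see how many header tags a page actually has, Python's built-in HTML parser is enough. A minimal sketch; the sample page is made up, and the count says nothing about whether the headers are keyword-rich or well written. It also doesn't turn the 12-13 figure from the study into a target, since that figure describes a correlation.

```python
from collections import Counter
from html.parser import HTMLParser


class HeaderCounter(HTMLParser):
    """Counts <h1> to <h6> start tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase
        if tag in {"h1", "h2", "h3", "h4", "h5", "h6"}:
            self.counts[tag] += 1


def count_headers(html_text: str) -> Counter:
    parser = HeaderCounter()
    parser.feed(html_text)
    parser.close()
    return parser.counts


page = "<h1>SEO Tips</h1><h2>Backlinks</h2><p>...</p><h2>Headers</h2><h3>Why</h3>"
counts = count_headers(page)
print(sum(counts.values()), dict(counts))
```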

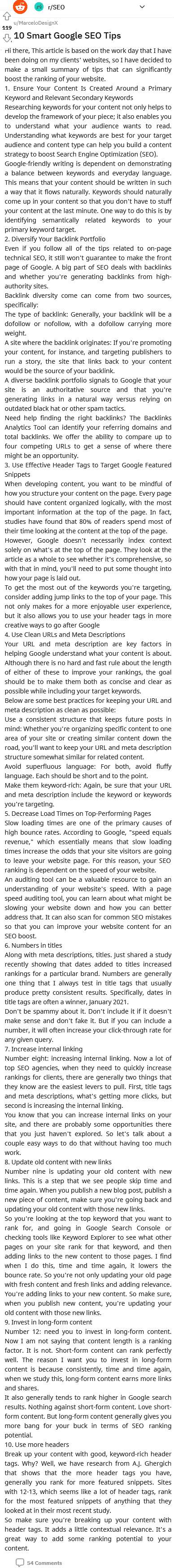


"When you're doing that long-form content, make sure you do number 13"

Oh, you just copied this article from somewhere then?

This is an excerpt from an article on my website. I initially created a post with a link pointing to the article, but since that isn't allowed, I decided to share the content instead, shortening the tips so as not to overwhelm people who only want to read something brief.

When using blog excerpts on Reddit, you might want to proofread them to make sure the excerpt makes sense without additional context. Referencing a 13th tip makes no sense when your list ends at 10.

MarceloDesignX ✍️

Obviously it doesn't make sense; it was just a mistake that I missed. I'm going to be crucified just for wanting to contribute content and making one mistake.

HumbleBrothers

What's your strategy to earn backlinks? I've never been able to get solid links.

Since all my clients are companies, I do local SEO by registering them in directories, and I also try to get links on high-authority websites such as Quora, Medium, and other similar platforms.

Calista110

Great advice, thanks! About 6. Numbers in titles, would you recommend updating the release date of old articles?

Yes, I recommend it 100%. If you update old content that already ranks in Google, then in addition to the title you can also update the post with new links.

Propel-Guru

Here are 10 smart Google SEO tips. Implement these on your website and I am sure you will see positive results.

• Voice Search Optimization

• Mobile Optimization

• Google's Expertise, Authoritativeness, Trustworthiness (EAT) Principle

• Featured Snippet

• Image Optimization

• Semantically Related Keywords

• Building Quality Links

• Local Search Listings

• Improved User Experience

• Schema Markup

These are must-implement steps for your website if you want good results.


11 Lessons I Learned From Building 100 Whitehat Backlinks In 4 Weeks

Genuine Backlinks from Trusted Sources Are One of the Best Metrics (Signals)

How Many Backlinks You Need to See SEO Results Depends on How Competitive Your Keywords Are

The Best Way to Research Backlinks Is Using a Tool to Peek at Your Competitors' Backlinks

A Secret to Getting High-DA Backlinks You Should Bookmark!

Low-Competition Keywords Still Need Some Backlinks

How to Earn Backlinks Without Paying Incentives

The Summary of Discussion 1: The Anchor Text Ratio of Backlinks

Chris

What should the anchor text ratio be?

If I need to build 200 links, what should the ratio be?


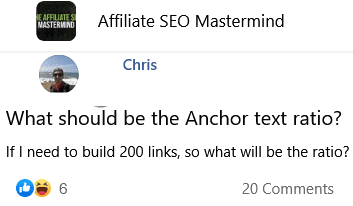

1% exact, 90% brand and full URL, 10% generic


101%? 👀
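The split suggested above adds up to 101%. If you'd rather measure the mix your site actually has (the replies below argue there's no magic target ratio anyway), you can bucket the anchors from a backlink export. A minimal sketch; the three bucket rules, the brand name, and the anchors are all hypothetical, and real anchor classification is messier.

```python
from collections import Counter


def anchor_mix(anchors: list[str], brand: str, domain: str, exact_keyword: str) -> dict[str, float]:
    """Bucket anchor texts and return each bucket's share in percent (sums to 100)."""
    buckets = Counter()
    for anchor in anchors:
        a = anchor.strip().lower()
        if a == exact_keyword.lower():
            buckets["exact"] += 1
        elif brand.lower() in a or domain.lower() in a:
            buckets["brand/url"] += 1  # brand names and naked/full URLs
        else:
            buckets["generic/other"] += 1  # "click here", "this site", etc.
    total = len(anchors)
    return {name: 100 * n / total for name, n in buckets.items()} if total else {}


# Hypothetical anchors from a backlink export
anchors = ["Acme", "acme.com", "https://acme.com/", "click here",
           "blue widgets", "this site", "Acme Widgets", "read more"]
mix = anchor_mix(anchors, brand="Acme", domain="acme.com", exact_keyword="blue widgets")
print(mix)
```

Because every anchor lands in exactly one bucket, the shares always add up to 100%.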

Peter

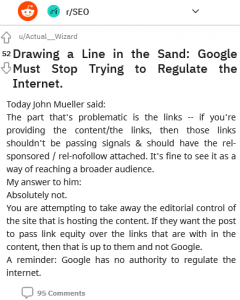

There isn't a magic ratio number (despite what people will claim). Some sites naturally have much higher anchor/domain name matching (location-based Exact Match Domains (EMDs), for example), but even then there is no magic ratio number.

Roger

Google judges links on a one-by-one basis. If 100% of the backlinks are judged to be normal, then 100% of the anchor text would be used.

Studies that show a percentage of anchor text to high ranking sites are misleading. The study authors do not know which of those anchor texts are being used by Google, so any claims made about anchor text ratios are misleading. It's long past time the Search Engine Optimization (SEO) industry stopped paying attention to Search Engine Result Page (SERP) correlation studies because they are misleading.


I'd add to this that for every manual action we remove, by far the biggest giveaway for unnatural linking at scale is overuse of keyword anchors. It's super easy to spot the patterns with just the human eye when you know what to look for, so one can only imagine how good G is with near-unlimited processing power.

Roger » Callum

True. 200%.

I think link patterns give away link schemes. I've used LRT (Link Research Tools) to map out low-quality link networks. You enter two domains and the site you want a link from, and it tells you all the links those domains have in common. It easily maps out low-quality link networks.

It's eye-opening, because if I could find these low-quality sites with LRT, it should be so much easier for Google.
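At its core, the "enter two domains and see the links they have in common" check is a set intersection over referring domains. A minimal sketch with made-up data; this is not how LRT or Google actually implements it, just the basic operation. Mining a network further would mean repeating the step with the domains it turns up.

```python
def common_referrers(backlinks: dict[str, set[str]], *domains: str) -> set[str]:
    """Return referring domains that link to every one of the given domains."""
    sets = [backlinks.get(d, set()) for d in domains]
    # set.intersection needs at least one set, so guard the no-argument case
    return set.intersection(*sets) if sets else set()


# Hypothetical referring-domain data for two sites
backlinks = {
    "site-a.com": {"blog1.net", "blog2.net", "news.org", "dir.io"},
    "site-b.com": {"blog1.net", "blog2.net", "forum.io"},
}
print(sorted(common_referrers(backlinks, "site-a.com", "site-b.com")))
```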

Callum » Roger

Yup!

Clients are always shocked when we give them the big long list and say "sooooo you paid for these right?" 😂

The interesting thing is that we've never seen a traffic drop after submitting a disavow file, often with tons of "high-value" links in it. So, for the most part, I don't reckon those links were doing anything, and the poor client had paid a lot of cash monies for them 🙁

Alex » Roger

What do you mean by a one-by-one basis?

Roger » Alex

Every link is judged individually based on a variety of factors, some known and some not known and some that are suspected.

Some are counted, some partially counted and others not counted at all.

In the past year, Google introduced Artificial Intelligence (AI) into judging which websites to exclude or include, so that is now part of the mix as well. The effect is that some sites won't even need to have their outlinks or inlinks counted, because they are excluded at the point of indexing by AI technology that is constantly fed new examples of spam, so it keeps getting better over time.

Ranking (SEARCHENGINEJOURNAL.COM)

Alex » Roger

Oh wow. That's huge.

1) Is this conjecture or have you tested some of these things?

2) If this is true, how come Private Blog Networks (PBNs) have so much power to move stuff up or down? They can't be closely related to the seed set, and even if they're off-topic, they're able to push power. The same goes for satellite sites like Google Sites. They're new but somehow are able to pass juice and rankings. They're neither linked to the seed set, nor would they be in the reduced link graph.

3) Regarding anchors, assuming a site is within the reduced link graph, surely the anchor text plays a role?

4) Is there a way to test or see if a site is within the reduced link graph? Any ideas here?

Roger » Alex

1. Google doesn't talk about what it uses. Even Penguin is not really understood, but judging by when that algo was published, I think it's the distance ranking algorithm. Google will NEVER confirm what's in it, though, so we have to read about the latest algos and then make an EDUCATED guess.

But one can only make an educated guess by having an education, i.e. reading what the latest research is.

People who don't know what the latest research is tend to rely on unreliable thinking, like "common sense," which has nothing tangible connecting it to algos that have actually been published by Google, Microsoft, or even universities like UMass and Stanford.

The latest on spam is that Artificial Intelligence (AI) thing that Google recently published.

I've been building links for 20 years.

It's hard to test anything and get consistent results, because there are so many variables in Google's algo that you can't account for all of them and then say with reasonable certainty that X anchors did the trick. You can never know which links had power, which had half power, and which had zero power.

So, over the years, I've gotten to know people and what they do, I know what I do, and I can tell when what they do stopped working.

Like, way back I did a thing where I wrote articles that would be syndicated to a certain group, resulting in thousands of links. That powered one of my sites for years over bigger and more authoritative sites, LOL. But it stopped working around the time of Penguin. I didn't get a penalty. Some links just stopped working, and my assumption is that those links lost their power. I'm pretty sure I know why: it has to do with their location on the page, link-graph-related relevance, and possibly similar content getting canonicalized to one link.

There was a time when paid guest posts with anchors in the articles did the trick. But at a certain point in time they stopped working. I know this because all the people who were hammering that all stopped doing it at the same time.

I remember many years later seeing a guest article in an SEO site where they were telling how to do the guest article thing, bla bla bla and they had their URL as an example. I checked and they weren't ranking for their keywords. And I thought, yeah, yup… indeed.

I'm not saying guest posts don't work. They can!

Just pointing out that certain posts with a certain pattern stopped working.

I use my best judgment with all the knowledge and experience that I have.

2. "how come Private Blog Networks (PBNs)…"

In Local Search rankings? The ranking algorithm for local search is generally easier to game.

Is it because of lack of competition? Maybe.

Is it because the algo is different for local? Probably.

3. >>>surely the anchor text plays a role?

Not necessarily. It can depend on the location of the anchor text. Google depreciates anchor text if it's in certain locations or surrounded by certain words.

4. >>>Is there a way to test… reduced link graph?

Not really. I've used Link Research Tools to discover whether a link opportunity is part of a link-selling network. I don't use it anymore, but it used to have a tool where you could enter two domains, see all the backlinks they have in common, and from there keep mining to unravel PBNs and link inventory.

For my workflow, in my opinion, I consider the links from those sites poisoned and excluded from the reduced link graph.

So if I could do that with LRT I'm pretty sure Google can unravel link relationships pretty easily. That's what the link graph is all about.

Alex » Roger

I love reading posts like this. It really gets me thinking! I've spent so much time working on sites, the user experience, and semantic relationships that I haven't had enough time to look at the link-building side for a while.

1) It does seem Google is ignoring certain links.

2) The idea of a reduced link graph makes a ton of sense.

I always assume Google is way smarter and has way more data than we can imagine. It's interesting to learn about some of the building blocks like what you're mentioning.

Here's another quick question, can nofollow links pass link juice? I.e. imagine you have tons of amazing 'seed' links pointing to your social profiles. Would any of this pass through to your site even if they're all nofollowed?

Roger » Alex

Social profiles are ignored, as they should be. Even without a nofollow they would be ignored. The only people who insist otherwise are those with skin in that game.

Google's Mueller Calls Web 2.0 Style Links Spammy (SEARCHENGINEJOURNAL.COM)

Alex » Roger

I've just gotten lost in all your link building articles! Wow. Awesome stuff. For those Web 2.0s, surely if they have some powerful links pointing at them, they'd pass power?

Roger » Alex

Some sites can be ranked regardless of anchor text. For example, this algorithm I wrote about two years ago doesn't use links (linked below).

It lets the regular ranking algorithm do what it does, then this algorithm comes in and RE-RANKS the pages by using just the content, with links playing zero part in the ranked web pages.

And this isn't the only algorithm published or patented by Google that talks about re-ranking web pages, either.
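To make the "re-ranking" idea concrete: a first-stage ranking produces an ordered list, then a second pass reorders the top results using only a content score, with links playing no part. This toy uses simple query-term overlap as the content score. It's a hypothetical stand-in to show the two-stage structure, not Google's neural matching or any published algorithm.

```python
def content_score(query: str, text: str) -> float:
    """Toy content score: fraction of query terms that appear in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def rerank(query: str, first_stage: list[tuple[str, str]], top_k: int = 10) -> list[str]:
    """Re-order the top_k results of a first-stage ranking by content score alone.

    first_stage is a list of (url, page_text) in its original ranked order;
    results beyond top_k keep their original positions after the re-ranked block.
    """
    head, tail = first_stage[:top_k], first_stage[top_k:]
    # sorted() is stable even with reverse=True, so ties keep first-stage order
    head = sorted(head, key=lambda item: content_score(query, item[1]), reverse=True)
    return [url for url, _ in head + tail]


# Hypothetical first-stage results (e.g. ordered partly by links)
results = [
    ("/link-heavy", "buy cheap links now"),
    ("/guide", "how anchor text works in google ranking"),
    ("/faq", "anchor text faq"),
]
print(rerank("anchor text ranking", results))
```

In this toy, the page that was first only because of the first stage drops to last once content alone decides the order.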

Some people who don't read the research papers say there's no use in reading them. But that's not true.

Knowing what has been researched and what MAY be in use helps you be smart enough to identify when someone's idea about anchor text or Search Engine Result Page (SERP) analysis is misleading and unlikely.

Like when people were saying that clicks and FB likes were a ranking factor, people like me who read the research knew it was incorrect.

The problem with SERP analysis studies and anchor text ratio studies is that they are very compelling, a word that means irresistible, especially when they tell you that millions of queries were studied. But adding more numbers to a flawed study does not improve its accuracy, which means its conclusions are still not factual or truthful.

Factual is always preferred.

This algo below that I wrote about is the kind of thing that helps explain why SERP analysis for anchor text ratios is not useful information. This has been the case for years and years with respect to anchor text ratios.

What is Google's Neural Matching? (SEARCHENGINEJOURNAL.COM)

Alex » Roger

This explains why I'm getting some great results from all the on-page tweaks we do. Honestly, the jumps we're seeing after tweaking pages have been a bit baffling. We still need to get some links, but this confirms what I suspected about a bigger shift toward on-page factors and understanding the 'topic quality' of an article rather than its popularity on social media (likes, etc.) or link popularity.

Almost like they want to grade articles based on the text rather than all the noisy signals we keep trying to game!


Find Opportunities for Backlinks and Partnerships with Other People and Businesses in Your Niche

How Can I Get Free Contextual Backlinks Like Guest Posts?

When Should You Build Backlinks to the Home Page Instead of an Inner Page?

I Let My Staff Create Backlinks for Me

Create Backlinks with Your Market Anchor Text and the Content Quality!

EAT, UX, Backlinks, Niche are Stronger than Other SEO Ranking Factors

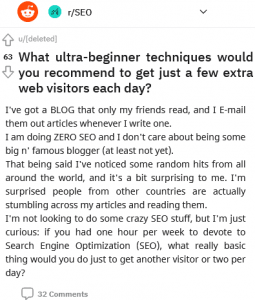

19 Ultra Beginner Tips to Grow Website Traffic