Discussion 2: Solutions for Google Still Not Indexing Your Pages or Content

u/MagPro

Fed up with Google not indexing (or very slowly indexing) my pages

It's been more than 2 months, and more than 80% of my pages are still not indexed. The content is unique, so I don't understand why Google is taking so long to index my pages.

The removal requests were complied with within a day, but indexing is still far too slow. It's infuriating. Any solutions?


It is a good explanation:

It's too early to be absolutely certain, but I strongly suspect that the changes we have seen in indexing, where many sites at the lower end of the 'global importance' spectrum are not getting content indexed as quickly as before, or in some cases are struggling to get it indexed at all, are not entirely temporary.

You see, this comes after several years of mumblings and rumblings within Google about their issues with quantity-over-quality spam. And, coincidentally, it follows right on the heels of vague announcements about new methods of dealing with spam in a spam update.

The problem for Google was sites like Quora, and a hundred other similar sites, where masses of user-generated content could produce literally hundreds of thousands of new pages over the course of just a few hours. Yet the vast majority of the new URLs this created were just rehashes of the same discussions found on a thousand already-indexed threads and URLs from the same site.

Then there is the increasing availability of so-called AI copywriting software: software that can create huge volumes of very low-quality content at almost no cost in resources. Now, machine-generated content spam is, despite the bullshit from the so-called AI content software, nothing new. Years and years ago there was software that did much the same thing, scraping and spinning existing content into new forms according to a set of rules and instructions; all the new software is doing is calling those rules and instructions "Artificial Intelligence (AI)".

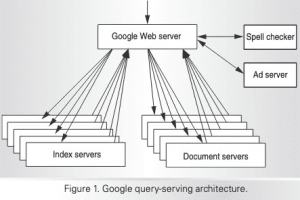

Google have systems to deal with this.

I believe they are simply improving and extending them. Crawl Prioritization has always existed in Google, and it is largely a scalable, self-regulating system. Every known URL that Google wants to crawl, either to index it for the first time or to revisit it to check for updates, is assigned a place in a long queue according to a priority scoring system. Ultra-high-priority pages and revisits are handled almost at once, while at the other end, ultra-low-priority URLs may wait months or even years, and may never get a turn at all, as higher-priority items keep being added to the queue ahead of them.
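The queue behaviour described here can be illustrated with a toy model. This is a minimal sketch, assuming a single numeric priority per URL and a fixed crawl capacity per round; the `CrawlQueue` class, the `simulate` function, the example URLs and the priority values are all hypothetical, and Google's real scheduler is not public. It only demonstrates the starvation effect: a low-priority URL keeps waiting as long as higher-priority URLs keep arriving ahead of it.

```python
import heapq
import itertools

LOW_PRIORITY_URL = "https://small-site.example/new-post"  # hypothetical URL


class CrawlQueue:
    """Toy crawl frontier (hypothetical): higher priority is crawled sooner."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker: FIFO among equal priorities

    def add(self, url, priority):
        # heapq is a min-heap, so store the negated priority.
        heapq.heappush(self._heap, (-priority, next(self._order), url))

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


def simulate(rounds, arrivals_per_round, crawls_per_round):
    """Return the URLs crawled, in order, over several rounds."""
    queue = CrawlQueue()
    queue.add(LOW_PRIORITY_URL, priority=1)
    crawled = []
    for r in range(rounds):
        # New high-priority URLs (hypothetical scores) join ahead of the old one.
        for i in range(arrivals_per_round):
            queue.add(f"https://big-site.example/r{r}/p{i}", priority=10)
        # Fixed crawl capacity per round.
        for _ in range(min(crawls_per_round, len(queue))):
            crawled.append(queue.pop())
    return crawled


print(LOW_PRIORITY_URL in simulate(1, 3, 4))  # True: spare capacity
print(LOW_PRIORITY_URL in simulate(5, 3, 3))  # False: starved every round
```

With spare capacity, the low-priority URL is crawled last in the round; when new high-priority arrivals fill every slot, it never gets a turn, which is the "possibly never get a turn at all" case.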

It has always been quite typical for an 'average' website, with 'average' importance and link popularity values for its size, to have about 90% of its content indexed and about 10% not indexed at any given point in time. Sites such as Amazon and eBay might have a far, far higher percentage of unindexed product pages, simply because those pages change so quickly and often sit so deep in the site's link structure.

However, over the past few years, I have seen a steady increase in the number of regular, average-ish business sites, ones that have been advised to blog and create fresh content every few days, that have a MAJORITY of their content not freshly indexed or reindexed.

Basically, whatever PageRank flowed into and around the site from the few pages that earned any genuine links was being spread so thin, flowing to thousands of essentially pointless blog posts nobody cared about, that the strength of any one page was diluted to the point where it just wasn't seen as important.
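The dilution effect can be sketched with the classic, publicly described PageRank formula. This is a simplified textbook version (damping factor 0.85, power iteration), not Google's current ranking system, and the site graph is hypothetical: one external page links to the home page, the home page links to a `services` page plus N blog posts, and every internal page links back home. Adding posts splits the home page's outgoing PageRank into more pieces, so the `services` page's share shrinks.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Textbook power-iteration PageRank on {page: [outlinks]}.

    Assumes every page has at least one outlink (true for the toy graph
    below), so no dangling-node handling is included.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            # A page's rank is split evenly across its outlinks.
            share = damping * rank[page] / len(outlinks)
            for target in outlinks:
                new_rank[target] += share
        rank = new_rank
    return rank


def services_rank(num_posts):
    """PageRank of a hypothetical 'services' page as blog posts are added."""
    posts = [f"post-{i}" for i in range(num_posts)]
    links = {
        "external-site": ["home"],  # the one genuine inbound link
        "home": ["services"] + posts,
        "services": ["home"],
    }
    for post in posts:
        links[post] = ["home"]
    return pagerank(links)["services"]


for n in (5, 50):
    print(n, round(services_rank(n), 4))
```

Under these assumptions the `services` page scores roughly 0.085 with 5 posts and roughly 0.01 with 50, even though its only real inbound link never changed.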

My suspicion then is that Google have tightened this up, just a little, to help them find truly worthwhile pages even in a swamp of dross, and at the same time, make it much clearer to quantity spammers that their tactic is self-destructive.

So, given all of this, here is my advice on how to focus your content going forward:

First, do not publish new stuff just to publish new stuff. If a new page is not built to convert for a specific campaign (e.g. a new product line), or is not a well-thought-out piece of content you are sure will attract a bunch of genuine new citations, rethink it.

You need to focus on more results from fewer pages going forward.

It is better to spend 2-3 months creating one absolute killer piece of content, such as a major study, a really good survey with expert insights, or something else truly special and remarkable that will earn you a bunch of high-value links and buzz, and have people searching *specifically* for that study or page, than to churn out minor posts a few times a week that gain minor links.


Crawl Prioritization was always going to become more and more of an issue over time. That's how power laws work. The rich get richer.

Crawl Priority is a complex system, and some of it is driven by factors such as news events, big trends, and other circumstances beyond your control. But a lot of it is stuff you have some control over, or can at least intelligently leverage and influence.

'Importance' of a site is one of the signals for priority, and the factors that show importance are the strength and power (not volume) of links and citations. When a major or local news site mentions you, that is a sign of importance. When your granny mentions what a good boy you are, that isn't. If you go through some incredible shenanigans to get adopted so that you have a thousand 'Grannies', and they all cite what a good boy you are, that STILL isn't important. Focus on quality links, links that can't be bought, or faked, or gained by the worst of your rivals.

If you have pages that get a lot of search impressions but almost no clicks, consider revising, updating, or pruning them. If Google see that the pages they already have from your site rarely perform, they don't tend to adjust the ranking of the site, but they may lower the priority of grabbing any more.
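One way to find such pages is to filter a per-page performance export (for example, the Pages table of the Search Console Performance report, which lists clicks and impressions per URL). This is a minimal sketch, assuming the data has already been loaded into `PageStats` records; the class, the sample rows, and the thresholds `min_impressions=1000` and `max_ctr=0.005` (0.5% CTR) are hypothetical starting points, not values published by Google.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate; pages with no impressions count as 0.
        return self.clicks / self.impressions if self.impressions else 0.0


def pages_to_review(stats, min_impressions=1000, max_ctr=0.005):
    """Return URLs with many impressions but almost no clicks, worst CTR first.

    Both thresholds are hypothetical and should be tuned per site.
    """
    candidates = [
        s for s in stats
        if s.impressions >= min_impressions and s.ctr <= max_ctr
    ]
    return [s.url for s in sorted(candidates, key=lambda s: s.ctr)]


# Hypothetical sample data for illustration only.
sample = [
    PageStats("/guide", impressions=12000, clicks=600),   # 5% CTR: keep
    PageStats("/old-news", impressions=8000, clicks=4),    # 0.05% CTR: review
    PageStats("/tag/misc", impressions=3000, clicks=12),   # 0.4% CTR: review
    PageStats("/new-post", impressions=200, clicks=0),     # too few impressions to judge
]
print(pages_to_review(sample))  # ['/old-news', '/tag/misc']
```

The impressions floor keeps brand-new pages with little data out of the list, so only pages Google has shown often, and searchers have consistently skipped, are flagged for revising, updating, or pruning.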

Raise your profile. Be part of communities and conversations. Not to fill your contributions there with links, but so that you get mentioned more, and are discussed because of who you collaborated with, assisted, or were generally there for. This shows a level of importance wider than just what you say about yourself.

If you are regularly adding content to your site, for promotions, products, or simply news and updates, make absolutely certain that you are gaining links and citations, signals of importance, even faster than you are adding content that dilutes what you have. Focus on earning great citations.

Another part of crawl priority is in supply and demand. Think very, very hard before writing content that effectively already exists out there from a thousand other sources. Focus on content that is hot topically, that is 'on trend', and especially where you have a unique perspective (or can hire one), that separates your content from the masses.

(c) Ammon Johns (Facebook user)

Very well-thought-out and informative response. It all comes down to this: do the work to be better. This is also why you need visitors from multiple channels who will interact with your site and stay on it. Search Engine Optimization (SEO) has always been a long game… it's just getting longer and longer.

IllusWheel

I think we're well beyond this being a "bug" and rather more of a new normal. The indexing bugs reported months ago were probably tests supporting this change.

Crawling and indexing now seem to be even more closely aligned with consumer demand for the content on your site. So even though your content may be unique, there are no positive metrics to support priority "discovery" crawling.

This doesn't mean it won't perform well in search, but webmasters may need to be more patient and thoughtful about quality as well as audience building.


Discussion 1: Solutions for "Discovered – Currently Not Indexed" in GSC

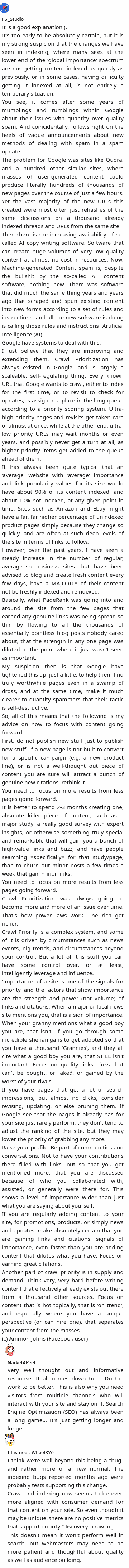

Hossain

Please help me get rid of the "Discovered – Currently Not Indexed" problem. Please, for God's sake!

I've been facing this problem for weeks. I didn't have it before; it started after I posted about 7-8 articles. I have already tried the following things thoroughly:

– Making posts plagiarism-free (using Copyscape, Grammarly, and other tools)

– Using the Rank Math Instant Indexing plugin and trying the Microsoft IndexNow plugin (crawlers do crawl the posts, but the articles don't get indexed)

– Making all of my content at least 1,500 words and high quality

– Using ping tools

– Good internal linking

So WTH, why does Google still think my articles are not worth indexing?


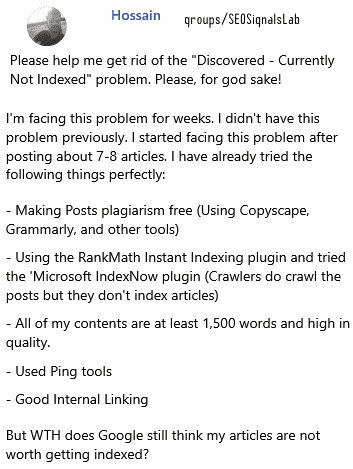

You need backlinks to those pages. Period.


Suresh

Plagiarism is not a problem. Whatever you have done is perfect. Please generate backlinks to all the pages/posts and try sending those links through a (third-party) indexer; it will surely help.


Smith

Interlink them more. Or get backlinks.


Dejan Minčić

Try this:

https://indexmenow.com/en/

I didn't use it myself, but a couple of my friends suggested it as a good solution for getting pages indexed faster.


Mišo

Do you have a "latest posts" section on your homepage? A good idea is to link from there for a while. Good internal linking is important in general, too. You can also try Twitter mentions, or even linking to those articles from Google My Business posts.


No, I don't have a "latest posts" section specifically, but the latest posts do show up on the home page.

Mišo » Tawhid

If they show up, then you have it, one way or another. 🙂

Hossain ✍️ » Mišo

Hi, I've pretty much been able to fix the "Discovered – Currently Not Indexed" issues using the Instant Indexing tool by Rank Math.

The posts do show up as "URL is on Google" when inspected, but they are not disappearing from the "Discovered – Currently Not Indexed" tab. Is that a problem? Please help me.

Quinton

How is your site speed?


Looks good. Page score is above 70, and desktop score is 90+. It loads within 3 seconds.

Marina

I have a "Recent articles" drop-down in the main menu with the three latest articles. If I notice one hasn't been indexed, I put it in there (again) and keep it there until it's indexed. I also make as many internal links as possible, but when I tried that alone, it didn't help.

Alright, I've pretty much been able to fix the "Discovered – Currently Not Indexed" issues using the Instant Indexing tool by Rank Math.

The posts do show up as "URL is on Google" when inspected, but they are not disappearing from the "Discovered – Currently Not Indexed" tab. Is that a problem? Please help me.

Marina » Hossain

If I understand you correctly, one tool claims that the page is indexed and the other one doesn't? In that case, I would check manually whether the page shows up on Google by typing site:yourpageurl.com into the search box.


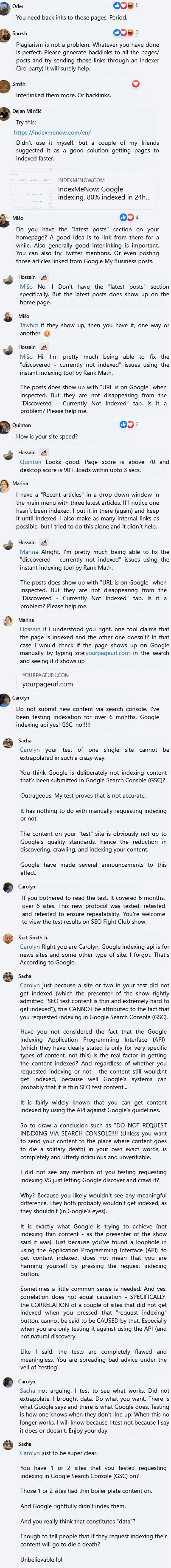

Carolyn

Do not submit new content via Search Console. I've been testing indexation for over 6 months. Google Indexing API, yes! GSC, no!!!!!

Your test of one single site cannot be extrapolated in such a crazy way.

You think Google is deliberately not indexing content that's been submitted via Google Search Console (GSC)?

Outrageous. My test proves that is not accurate.

It has nothing to do with manually requesting indexing or not.

The content on your "test" site is obviously not up to Google's quality standards, hence the reduction in discovering, crawling, and indexing your content.

Google have made several announcements to this effect.

Carolyn

If you had bothered to read the test, you'd know it covered 6 months across 6 sites. This new protocol was tested, retested, and retested again to ensure repeatability. You're welcome to view the test results on the SEO Fight Club show.

Kurt Smith Jr » Carolyn

Right you are, Carolyn. The Google Indexing API is for news sites and some other types of sites. I forgot. That's according to Google.

Sacha » Carolyn

Just because a site or two in your test did not get indexed (and the presenter of the show rightly admitted that "SEO test content is thin and extremely hard to get indexed"), this CANNOT be attributed to the fact that you requested indexing in Google Search Console (GSC).

Have you not considered that the Google Indexing Application Programming Interface (API) (which they have clearly stated is only for very specific types of content, not this) is the real factor in getting the content indexed? And regardless of whether you requested indexing or not, the content still wouldn't get indexed, because, well, Google's systems can probably tell that it is thin SEO test content…

It is fairly widely known that you can get content indexed by using the API against Google's guidelines.

So to draw a conclusion such as "DO NOT REQUEST INDEXING VIA SEARCH CONSOLE!!!! (Unless you want to send your content to the place where content goes to die a solitary death)", in your own exact words, is completely and utterly ridiculous and unverifiable.

I did not see any mention of you testing requesting indexing vs. just letting Google discover and crawl the content.

Why? Because you likely wouldn't see any meaningful difference. They both probably wouldn't get indexed, as they shouldn't (in Google's eyes).

This is exactly what Google is trying to achieve (not indexing thin content, which is what the presenter of the show said it was). Just because you've found a loophole in using the Application Programming Interface (API) to get content indexed does not mean that you are harming yourself by pressing the request indexing button.

Sometimes a little common sense is needed. And yes, correlation does not equal causation. SPECIFICALLY, the CORRELATION of a couple of sites not getting indexed when you pressed that "request indexing" button cannot be said to be CAUSED by pressing it, especially when you are only testing it against using the API (and not against natural discovery).

Like I said, the tests are completely flawed and meaningless. You are spreading bad advice under the veil of 'testing'.

Carolyn » Sacha

Not arguing. I test to see what works. I did not extrapolate; I brought data. Do what you want. There is what Google says, and there is what Google does. Testing is how one knows when they don't line up. When this no longer works, I will know because I test, not because I say it does or doesn't. Enjoy your day.

Sacha » Carolyn

Just to be super clear:

You have 1 or 2 sites that you tested requesting indexing in Google Search Console (GSC) on?

Those 1 or 2 sites had thin boilerplate content on them.

And Google rightfully didn't index them.

And you really think that constitutes "data"?

Enough to tell people that if they request indexing their content will go to die a death?

Unbelievable lol

Teague » Carolyn

Your preso on Fight Club was really insightful, thanks for sharing 🙂 From personal experience, indexing is becoming a big issue, that's for sure.

Carolyn » Teague

Thanks so much for letting me know. Lots of people have confirmed that, based on the testing results, they set up the same process and it's working great for them as well. One colleague confirmed getting over 10K pages indexed using the same system.

Walker » Carolyn

To test, I submitted my latest 10 articles via GSC with no internal linking. Half got indexed immediately, and the ones that got indexed targeted lower-competition keywords.

Carolyn » Walker

Other people have told me they got the same level, but most people are not as lucky. 50% indexation is good. I've found that when Google isn't updating, indexation with the method I've been testing is 100%.

Peterson

For about a year, I've seen oddities when requesting indexing via GSC on a mix of new and established sites. What used to get indexed within an hour sometimes gets stuck for months.

The SLUG rename trick worked on one post for an established site, and it started getting traffic from Google the same day. No meaningful changes between the initial GSC submission and the GSC resubmission. I also knew the keyword was essentially "no competition" because all of the ranking content was mismatched.

For a new site I started in mid-January, it was crickets after submitting 5 posts via Google Search Console (GSC). They were 2,000-2,500 words at the time, so comprehensive, and focused on low-competition keywords.

On two other test sites, the blank homepages self-indexed within a few days. That first site finally got just its homepage indexed after 6 weeks. I also finally gave in and set up the Google Indexing API for that site. Posts are being indexed the same day, and a few already have keyword impressions and click-throughs. Nothing consistent yet from Google. Bing traffic is starting a drip of consistency. I figure I'll see where things are by the end of April, which makes it about 45 days from starting API indexing requests.

Submitting via GSC seems to be a black hole that sometimes has success, but not like it used to a year ago. It also seems to be more problematic with new sites, but I don't have a variety of projects running for that.


Bogdan

Read about crawl budget. You have to improve your website: make a better structure, avoid pages with high click depth, get rid of unnecessary pages (but not with noindex or nofollow), implement a better internal linking system, improve page load speed, add quality text to thin content pages, and so on. Once your site is better and most of the pages you give to G are index-worthy, you'll get a better crawl budget, and more pages will be downloaded on each Googlebot pass.
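Click depth is the minimum number of clicks from the home page to a page, which a breadth-first search over the internal link graph computes. This is a minimal sketch, assuming you already have your internal links as a dict mapping each path to the paths it links to (for example, exported from your own site crawler); the function names, the sample site, and the `max_depth=3` cut-off are hypothetical. As a by-product, pages that are never reached from the home page are listed too.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search from the start page over internal links.

    links: dict mapping each page path to the list of paths it links to.
    Returns {path: minimum clicks from start}; unreachable pages are absent.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def deep_and_unreachable(links, max_depth=3, start="/"):
    """Return (pages deeper than max_depth, pages not reachable from start)."""
    depths = click_depths(links, start)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable


# Hypothetical site: a paginated blog archive pushes old posts deep.
site = {
    "/": ["/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
    "/blog/old-post": [],
    "/orphan-post": [],
}
print(deep_and_unreachable(site))  # (['/blog/old-post'], ['/orphan-post'])
```

Pages in the first list are candidates for links from higher-level pages (home, category hubs, related posts); pages in the second have no internal path from the home page at all.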

Can I please inbox you?

Bogdan » Hossain

During business hours, Romanian time, sure

Lisa

How many pages do you have on your website? I had this problem for months on a new website with fewer than 20 pages; when I started uploading 1 or 2 new pages per week, Google started indexing all of the content.

I currently have 22 posts

Lisa » Hossain

It could be just that: since you don't have a lot of content, Google has no reason to visit your website for the moment.

Cippy

I managed to solve the issue with these steps:

1. Make sure you haven't over-optimized your content for the primary keyword, and change your current H2, H3, etc. copy

2. Change the meta title and H1 copy

3. Resubmit the URL

Faizan

Use the IndexNow API feature in Rank Math (free) and make internal linking STRONG enough.

This is what helped me.
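For reference, IndexNow is an open protocol for notifying participating search engines (such as Bing and Yandex) about new or changed URLs; Google has not announced that it uses it, so it is not a direct route into Google's index. This is a minimal sketch of the bulk-submission JSON body, assuming the field names (`host`, `key`, `keyLocation`, `urlList`), the key rules (8 to 128 characters of a-z, A-Z, 0-9 and dashes), and the 10,000-URL limit described in the IndexNow documentation; `build_indexnow_payload`, the key, and the URLs are hypothetical, and the sketch deliberately sends nothing.

```python
import json
import re
from urllib.parse import urlsplit

# Key rules per the IndexNow docs: 8-128 characters of a-z, A-Z, 0-9 or '-'.
KEY_PATTERN = re.compile(r"[A-Za-z0-9-]{8,128}")
MAX_URLS = 10_000  # documented per-request limit for bulk submissions


def build_indexnow_payload(key, urls, key_location=None):
    """Return the JSON body for an IndexNow bulk submission (nothing is sent)."""
    if not KEY_PATTERN.fullmatch(key):
        raise ValueError("key must be 8-128 characters of a-z, A-Z, 0-9 or '-'")
    if not 1 <= len(urls) <= MAX_URLS:
        raise ValueError(f"submit between 1 and {MAX_URLS} URLs per request")
    # The body has a single "host" field, so this sketch requires one host.
    hosts = {urlsplit(u).netloc for u in urls}
    if len(hosts) != 1:
        raise ValueError("all URLs must share the same host")
    body = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location is not None:
        body["keyLocation"] = key_location
    return json.dumps(body)


# Hypothetical key and URLs for illustration only.
print(build_indexnow_payload(
    "0123456789abcdef",
    ["https://www.example.org/post-1", "https://www.example.org/post-2"],
))
```

The body is meant to be POSTed with `Content-Type: application/json; charset=utf-8` to a participating endpoint such as `https://api.indexnow.org/indexnow`, and the same key must be verifiable in a text file at `https://<host>/<key>.txt` or at the URL given in `keyLocation`.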
