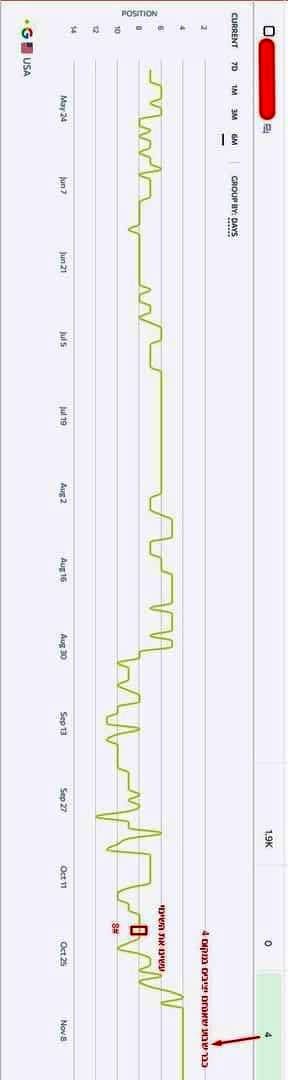

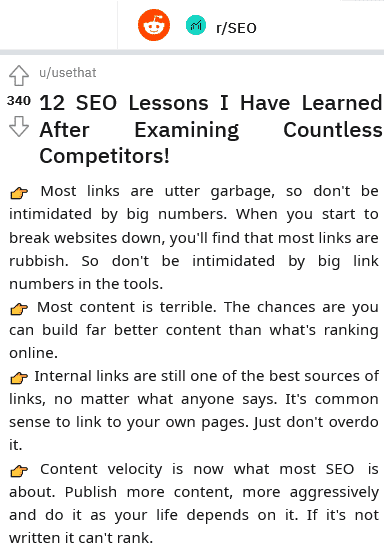

# **Can deleting 1,938 words from a page help it rank better?**

**6,052 words vs. 4,114 words**

One of our sites, which had long ranked quite well for competitive keywords in the US, started to drop in the rankings for important keywords.

We spent a while trying to figure out what the problem could be. Was it the video we added above the fold (ATF)? Or maybe the affiliate links we added at the top, which were less relevant to users in their location?

(Of course, we also changed the title and description several times, and it didn't really help.)

**All this time, low-quality sites kept overtaking us, which made us realize that something more was going on.**

**As a last resort, we decided to refine the content and make it more precise.** Instead of long paragraphs that went into too much detail on the subject, we made the paragraphs shorter and clearer: exactly what the user is looking for.

On October 21 we made the content change and returned to our previous position, and we are expecting a little more respect from Google so we can enter the top 3 🙂

**Conclusions:**

* We would never have suspected the content if we had not tried all the other changes first. We thought our content was very in-depth on the subject and that there was therefore nothing to change in it.

* You can try changing the title and description, but if that has not worked after two attempts, you can go further and try other things.

* Documenting your processes can produce material and working methods for future use.

Google doesn't count words or keywords in that sort of way. But it does count page speed, and the amount of code and text on a page increases its file size, which in turn increases the time it takes to load. So when you cut half the text away and see a page rise in rankings, it means that the quality that text was adding, and its relevancy to that particular search, was worth less than the tiny gain from shrinking the file by a few KB and loading fractionally faster.

Isn't relevancy to a topic also calculated as a relative figure? In that case, decreasing the total amount of text while keeping the valuable parts could actually increase relevancy.

Ammon 🎓 » Robin

No, and for absolute certain not since Passage Ranking came along. There were earlier parts of the evaluation process that negated it too. That's why keyword density was always a myth once links and off-page factors became part of the calculations.

There's been a long-standing belief that longer pages (above 1,500 words in main content) do a lot better on average than shorter pages (fewer than 1,500 words).

There's some truth to this, and a great many different studies ran experiments and showed data to prove it beyond doubt. Except they got it all wrong.

You see, a big factor in why longer pages do better is that a lot of people are exceptionally lazy. When you write a short-ish article, people will read it all. Sometimes they won't agree with parts of it, or sometimes they do, but are so busy thinking about what they read that they don't immediately think to share it. But the freaky bit is that when you share a longer piece, many won't even bother to try to read it all, but they want people to think they did, so if they trust that the author has written something good, OR they trust whoever shared the link to it that they found, they are more likely than otherwise to just share it themselves, rubber-stamped.

Longer articles get more links and shares, in part because people *didn't* read them. Because a long article gets more links and shares, more people will see a link or share, see that it is TL;DR for their tastes, and share it on themselves. Classic power law stuff.

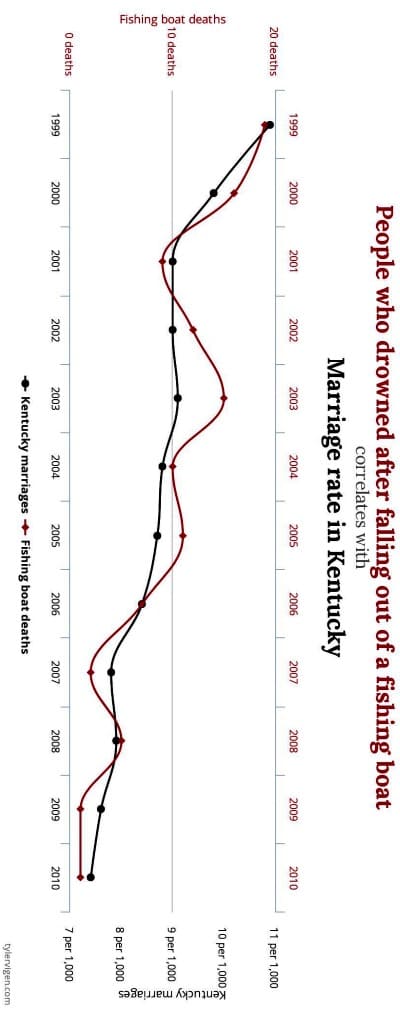

That's part of what was wrong with the studies – they didn't recognize the *why*. The other part was an even more classic error with statistical studies and experiments – selection bias.

For an awful lot of companies, especially the big brands, the homepage has very little text. Certainly fewer than 1,500 words of article content. But if you search for "Amazon", the highest-ranked and most powerful page they have will be the homepage, not any of the long, long pages that detail how Amazon works, its corporate structure, or how its affiliate program works. Trust me, they have thousands of long, wordy pages that talk in detail about Amazon, and the page that 'wins' is the page that doesn't.

Want to try a search for "Google" and see whether any of the million odd long posts or articles from the webmaster help, or the search central, or the FAQs rank above the almost wordless homepage?

All of those studies and experiments deliberately excluded anything that would stop the results from saying just what they wanted them to say.

Intent matters far more than content. There are many times when a shorter page that gets straight to the information I need is a far, far better result than something where there's a billion facts and examples, and finding the one bit of info I want will take me 20 minutes of hard reading.

Truslow 🎓 » Ammon

Do you mean to say that data correlation doesn't necessarily indicate cause and effect? That's just crazy talk!

Eric » Ammon

While that's true for humans (the short piece that gets straight to the point SHOULD be what humans want), Google, as you mentioned, surfaces the longer ones. I recently created a training on this and one of the examples was 'how to remove blueberry stains from a carpet' (or something like that). Instead of showing me ONE good answer, all the articles surfaced showed a bunch of methods of removing stains.

In that sense, it was the longer, "cover more of the topic" articles that Google rewarded, as opposed to the shorter, straight answer from a cleaning expert. Of course, you're right, I didn't perform a detailed link analysis on all of them, so it's very possible that the links aren't all equal (but then again, from a webmaster point of view, if those articles get links naturally, then those are the ones you should be creating!)

Ultimately, I agree with you; however, I'm on the side that says Google's Artificial Intelligence (AI) looks for overall page relevance to the query (search intent, as you mentioned).

The more confident Google is that you're answering the query, the higher you'll rank. And when people create SUPER long articles, it dilutes the confidence level that the article is specifically about X question, because longer articles tend to have a wider scope of content. That could be why he is ranking higher with fewer words.

Ammon 🎓 » Eric

For many, perhaps even most, queries there is certainly strength in having broader coverage of the topic: more facts (the Knowledge Graph plays heavily into this analysis), more of the related info, the background to it, etc.

All of those things will tend to correlate with a longer article, because concise writing, packing more information into fewer words, is one of the rarest of all writing talents, and even those with the talent often don't spend that extra time.

But that was the point I was making. It wasn't the number of words, or the length of the article itself, that caused the better ranking performance or the higher degree of quality and relevancy. It was simply that the things that do enhance quality, completeness, expertise, and authority *tend* to also correlate with longer pages, without there being a direct cause-and-effect relationship.

So all those studies were pointing out entirely the wrong metric.
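To make that concrete, here is a minimal simulation sketch, not real ranking data. It assumes a hypothetical model in which a hidden `quality` value drives both article length and ranking score, while length itself never feeds into the score. The names `pearson`, `simulate`, `quality`, `length`, and `score`, and the noise model, are all made up for illustration.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n=2000, seed=0):
    """Assumed toy model: hidden quality drives both length and ranking."""
    rng = random.Random(seed)
    quality = [rng.gauss(0, 1) for _ in range(n)]
    # Length and ranking score each depend on quality plus independent noise.
    # Length is never an input to the ranking score.
    length = [q + rng.gauss(0, 1) for q in quality]
    score = [q + rng.gauss(0, 1) for q in quality]
    raw = pearson(length, score)
    # Subtract the shared cause and correlate what is left over.
    length_rest = [l - q for l, q in zip(length, quality)]
    score_rest = [s - q for s, q in zip(score, quality)]
    controlled = pearson(length_rest, score_rest)
    return raw, controlled


raw, controlled = simulate()
print(f"length vs. score: {raw:.2f}")
print(f"after removing quality: {controlled:.2f}")
```

In this toy model, length and score come out clearly correlated even though length has no effect on score, and the correlation all but disappears once the shared cause is removed. A study that only measured word count and ranking would see the first number and never the second.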

It's not at all unusual. Our industry has had huge arguments in the past, more times than I can count, over people confusing correlation with causation. And the broader sales and marketing field is even more famous for picking out awful KPI metrics.

I've always said that most of the time, KPI stands for "Kill Performance Instantly", because so many of them chase the wrong parts, the correlations, and by focusing on them take attention away from what they actually correlate with.

The most classic example is in sales, where some manager will note that the top performer in the team makes more phone calls, and meets with more prospects. So they set a KPI and tell all the team they must make at least 50 phone calls each week, and go to at least 10 meetings with prospects. What happens in the real world then is that the team members get so concerned with having to set up so many calls and meetings, that they stop pre-qualifying *who* they call, and sometimes even do less follow-up because that's now not a metric. The result is that the whole team do about 40% less successful work.

Always, always know the difference between causation (something that always results in an effect no matter what) and correlation (things that tend to both be symptoms of, or related to, something else).

Anyway, to get back to Eliav's original question, there comes a point at which adding more words is either repeating the same facts, adding stuff with less relevancy, or otherwise not increasing the signals anymore, but instead, just making the page longer, slower to load, harder to skim for that one piece of info, etc. You absolutely can pass a point of diminishing returns on simply writing more.

Despina » Eliav

Just curious if you had design and User Experience (UX) features to help users navigate the longer page more easily. Things like a table of contents, jump links, tabs or accordion widgets?

Just wondering if you tried anything on the UX side before culling the content too?

Sure, we have a TOC.

Keith L Evans 🎓

What You Really Need To Know:

It's more important to consider the layout and delivery of the page content.

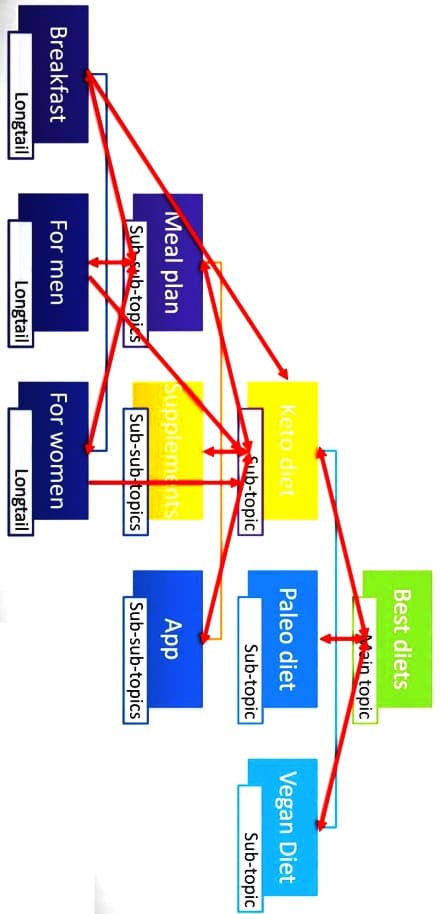

You need different pages for different queries with the ultimate goal of giving the user what they want.

Do they want video or more images? Will a drop-down Frequently Asked Questions (FAQ) easily get the important concerns to the user? What about 2 columns or 3 columns? And/or combinations of different page layouts, so the user gets the ultimate experience.

Words are great. But jump links and easy, intuitive ways of delivering content are more important.

I have tested and re-tested the same number of words for the same query, changing only the layout. It's more than words; it's User Experience (UX).

Have you done tests on this? Curious to know what UX features you've found to help the most in this situation?

Morgan

Thanks for sharing, good case study. There are more factors involved here that go beyond word count, which in itself is a metric I use less and less.

Search engines are still algorithms and I really doubt that one of the metrics they look at is word count. I mean, if that were the case we would only have huge articles of over 10k words.

What really matters is keyword density, LSI / secondary keywords used, intent match, and just answering the question in a way that your user would want to have it answered.

I personally hate those 4-5k-word affiliate articles, for example, that have the entire second part of the article (i.e. the buying guide) filled with fluff.

All good apart from 'keyword density' and 'Latent Semantic Indexing (LSI)'.

Keyword Density is a term SEO users made up to be able to talk in forums about how they were using keywords without giving away what the keywords were. It's actually one of a set of 3 metrics that had to be used together, in the same way one talks about real world objects in 3 dimensions of measurement.

The 3 metrics were Keyword Density (the percentage of all words on the page that belonged to the keyword phrase), Keyword Prominence (how soon in the text they appear), and Keyword Count (literally how many repetitions).

But it was never, ever, a thing used by any search engine, nor remotely close. It was simply a way for old school SEO users, back when on-page was all there was, to talk about doorway page design.
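For anyone who never saw those forum metrics in use, here is a rough sketch of how the three could be computed together. The exact definitions varied from forum to forum, so the ones below (whole-phrase matching, prominence as a 0-based word position) are assumptions for illustration, and `keyword_metrics` is a hypothetical helper. It describes a page the way old-school SEOs did, not anything a search engine is known to calculate.

```python
import re


def keyword_metrics(text, phrase):
    """Return (density_percent, prominence, count) for a keyword phrase.

    Assumed definitions for this sketch:
    - count: non-overlapping occurrences of the whole phrase
    - density: words inside those occurrences / all words, as a percentage
    - prominence: 0-based word position of the first occurrence, or None
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0, None, 0
    count = 0
    first = None
    i = 0
    while i <= len(words) - len(target):
        if words[i:i + len(target)] == target:
            count += 1
            if first is None:
                first = i
            i += len(target)  # skip past this match so matches never overlap
        else:
            i += 1
    density = 100.0 * count * len(target) / len(words)
    return density, first, count


sample = "Blue widgets are great. Buy blue widgets today, because blue widgets last."
density, prominence, count = keyword_metrics(sample, "blue widgets")
print(density, prominence, count)  # 50.0 0 3
```

The three numbers only mean something together: the same density can come from a short page with two mentions or a long page with forty, which is why the metrics were quoted as a set.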

LSI is a technology that predates the web. It stands for Latent Semantic Indexing, and the absolute least important of the three words is 'Semantic'. Latent was very important, because it looked for things not written or even intended. The 'unconscious signals', never, ever, the words used. And Indexing is even more vital. LSI builds an entire index based on the relationship between documents and those latent signals. Add one single page (or simply update one) and you have to rebuild the entire index.

LSI is a powerful tool for something like a fixed corpus of documents where there are no more than about 20,000 documents at most. Great for indexing something like every letter ever written by Mark Twain, or all the diary entries of Winston Churchill, especially because it isn't like either is going to write any new ones on a regular basis.

It was never built for the web, was never suitable for the web, and was never used for the web. But sadly, it has the word 'Semantic' in it, and a lot of people who'd never heard that word before Semantic Search came along just latched onto the only patent they found that had that word in the title.

What search engines use for semantics is a very good language model (NLP, or Natural Language Processing). For intent they use aggregated click data, mostly at the level of which understanding of the user's intent helped the most users, in their thousands, need fewer total clicks and less time to be satisfied.

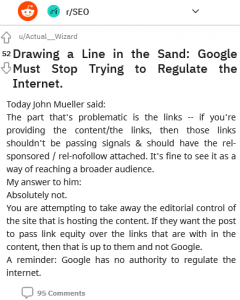

Does Google Penalize Duplicate Content?

Doesn’t Google implement anything in SEO that wasn’t mentioned in the Patent?

Does Google Crawl and Index Users Generate Content Sites like Quora and Medium Easier than Yours?

Google uses Natural Language Processing (NLP) instead of Latent Semantic Indexing (LSI) due to Both Different Patent Owners