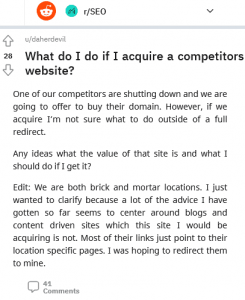

New client with about 50k pages (Groupon-like website selling coupons). Most (95%) of the pages are outdated offer pages (circa 2013-2015) but a handful still get quite a bit of traffic. I'd normally recommend retiring the old pages and inserting 301s, but I've read that having too many 301 redirects on a website is not good. Anyone had experience with large websites with outdated content? Thank you in advance to the best SEO group out there!


Where did you read about 301 being an issue?

Let's pick that up a little:

A 301 is supposed to send a user who follows an old pointer to a piece of content on your website to the new location where that content can be found. Why would that be an issue?

Now, there are different cases where this situation happens:

First, some page anywhere on the web (Search Engine Result Page (SERP) or any other web page) points to the old page. If it's a search engine, it will adjust over time. You could reach out to other webmasters to update their links too. However, that's not going to work in all cases. So, the 301 is good.

The second case is where you're linking internally to a page that is redirected. That's where you'd want to clean up the internal linking, as too many internal links to 301'd pages are not good for the user experience. You can resolve that easily by pointing to the final target directly. It's a matter of link hygiene. A huge issue? Debatable.

Something I like to think, but which hasn't been tested (maybe Ammon Johns can share a thought): if you permanently redirect to a new page, the crawler picks up that status for the old page, and if the new page hasn't been crawled yet, it is a new discovery. It then joins the queue of to-be-indexed pages behind those the crawler had already discovered, and therefore it may take some time before the crawler really gets to know that page. The bigger the site, the more crawlers need to prioritize. And then you've got a new page with just a few inlinks, which is not prioritized as highly as other pages, leading to delayed crawling, indexing and finally ranking.

Please excuse my crazy writing… Not a native tongue and that was hard for me to puzzle together in my brain 🤣

Moiz

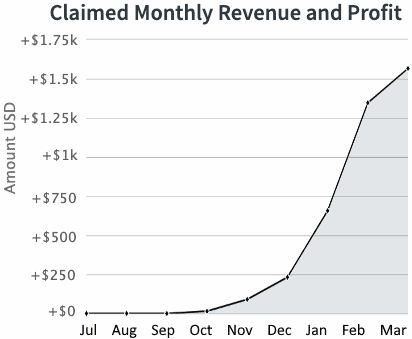

I recently did this, here is what I did and it worked. Keep in mind, my insights are from content pruning.

1: I filtered all the posts that had backlinks.

2: I filtered medium to high quality posts that had the potential to get traffic with on-page work and added content.

3: I filtered the thin and absolutely useless posts, like old content.

4: I deleted the posts with backlinks and redirected them to the homepage, 410'd the thin and bad content, and unpublished the medium to high quality posts.

Now the site only contains valuable and high quality posts.
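The four steps above can be sketched as a small triage function. This is only an illustration, not Moiz's actual tooling: the `Post` fields, the quality labels, and the rule that backlinks are checked before quality are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Post:
    url: str
    backlinks: int  # count of external links, from whatever backlink tool you use
    quality: str    # "thin", "medium" or "high" (a manual judgement call)


def triage(post: Post) -> str:
    """Return the pruning action for one post, mirroring steps 1 to 4."""
    # Assumption: backlinks are checked first, so a thin post that has
    # links is redirected rather than 410'd.
    if post.backlinks > 0:
        return "delete and 301 to homepage"
    if post.quality == "thin":
        return "410"
    # Medium to high quality posts without backlinks are unpublished.
    return "unpublish"


posts = [
    Post("/old-offer-a", backlinks=3, quality="thin"),
    Post("/old-offer-b", backlinks=0, quality="thin"),
    Post("/guide-c", backlinks=0, quality="high"),
]
actions = {post.url: triage(post) for post in posts}
```

Running a full export of posts through a function like this gives one action per URL that can be reviewed by hand before anything is deleted.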

What timeline was it? What were the results?

Ammon Johns 🎓 » Moiz

Many miss out on just how powerful pruning and refocusing can be. Glad to hear someone sharing how it worked.

Moiz

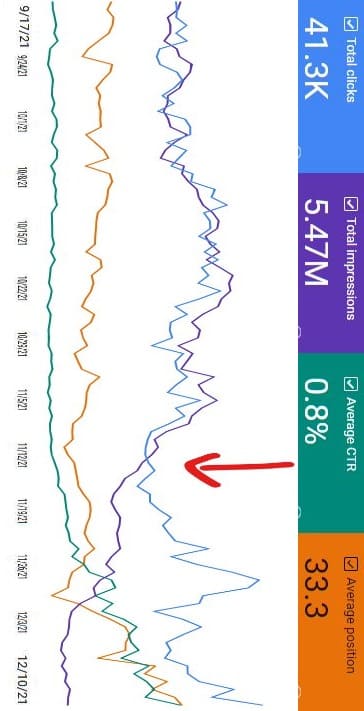

These are the results, Daniel and Ammon Johns.

Quite happy with how it turned out, I highly recommend content pruning. The site is now much faster as well, I deleted 6 years of Media Images that were attached to those articles.


Ammon Johns 🎓

Nice to see. And that clear point where the breakaway happens between the purple line of impressions and the blue line of total clicks is exactly how you see you pruned away the right stuff. Click Through Rate (CTR), which had been relatively flat for a long time, rises very nicely once all the results not getting clicks are removed from the averaging.

More results from fewer pages, just as I said in my post a while back.

Daniel ✍️ » Moiz

Very cool. Thanks a bunch for sharing.

Ammon Johns 🎓

There are a lot of cases in Search Engine Optimization (SEO) where our perceptions of a thing differ from Google's simply because of scale. Remember that no matter how big the websites we deal with, Google is a website whose database contains the entire internet, every link it knows to exist, PLUS an entire natural language system, with the Knowledge Graph on top of all of that. It is far bigger than the entire web, in that regard.

The way it looks at 301s is a lot different to the way an average webmaster, or even SEO, will look at them. And almost certainly it is also factoring in the duplicates, near-duplicates, and canonicals, at the same time.

So it is not so much the number of redirects itself, but more about the clarity of canonicalization, and the efficiencies that matter. For example, a redirect to a redirect to a redirect is bad efficiency for crawling and for indexing all those unnecessary middle-steps between the first link and the eventual destination.
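As a rough illustration of removing those middle steps, the sketch below collapses a redirect map so that every old URL points straight at its final destination. The function name and the dictionary format are assumptions for the example, not part of any particular server or CMS.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every source URL directly to the end of its redirect chain."""
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following hops while the target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical chain: /a -> /b -> /c -> /d
chained = {"/a": "/b", "/b": "/c", "/c": "/d"}
flat = flatten_redirects(chained)
```

After flattening, each old URL needs a single hop, and the same map can be used to update internal links so that they point at the final target.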

The clarity side is simple – a 301 does not mean "I found a page vaguely similar but different". It means "the exact resource you asked for has a new address and here it is". Don't use a 301 redirect to link to the 'closest equivalent' if it does not offer exactly the same thing to the end user.

If a product, service, or offer is no longer available, and you use a 301 redirect to push users to the category page to see the latest offers, that's not a technically correct use of a 301, and it may affect Google's 'trust' signals of your site as a whole. Get enough of those trust strikes against you and Google will start ignoring a lot of the explicit signals you send – everything from your idea of categorization and ontology, to your use of titles, link attributes, etc.

By all means though, if one offer, product, or service, has been replaced by another, a true equivalent, latest model, or upgrade, then that is an applicable and correct case for a 301, although even there, a page for the old offer that instead links and explains the change, the upgrade, is even better – it avoids user confusion if there is something different about the new to what they'd expected to find.

Remember, if a page has inbound links that have value, then keeping it around, updated to say that the offer expired, and with links to the new closest equivalents or the parent category, is fine and dandy. It has the links to give it value, and you'd not be linking to that expired offer on your own pages (so if the link has no value it becomes an orphan page and doesn't affect your PageRank at all).

Google don't care how many pages you have in the database. They have the entire web, the semantics of every language they can process, and the knowledge graph in theirs. What they care about is mostly a page by page analysis of the value of the page to a search user. Would including this URL in a Search Engine Result Page (SERP) improve the quality of the SERP to one of their customers, or better, to hundreds or thousands of their customers?

Don't be too afraid of losing some of those old links to old expired offers. Pages linking to expired offers where the webmasters don't care if the links are still valid are pretty low value links in the first place. Quite often of zero value, or getting you co-citation values with other expired stuff.

Amazing value right here. Thank you so much for taking the time to provide your insight. I am now a lot more confident to pursue a strategy of auditing which of the old offers are getting traffic, and which we could confidently kill + 301.

Btw, the pages that contain the expired offer already state that the offer itself is expired. It would still make sense to remove the completely dead ones though, right? To conserve crawl budget?

Ammon Johns 🎓 » Daniel

If there are no third-party links that bring any added value, then killing those pages off is always right. A 410 with a custom error page lets you handle this gracefully for anyone who had somehow bookmarked the page, or found a link to it that no tool had. The page can explain that offers expire, and this was one of those, but that you have plenty of fresh ones, with a category link and a homepage link to encourage them to look for some. Heck, offer them the signup to your alerts or email list so they don't miss out again.
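A minimal sketch of a 410 with a custom page, using only Python's standard `http.server` module. The path in `GONE_PATHS`, the page copy, and the link targets are hypothetical placeholders; a real site would normally do this in its web server or CMS configuration instead.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler

# Hypothetical list of retired offer URLs.
GONE_PATHS = {"/coupons/2013/spa-day"}

GONE_PAGE = """<!doctype html>
<title>This offer has expired</title>
<h1>Sorry, this offer has expired</h1>
<p>Offers do expire, and this was one of them, but there are plenty of fresh ones.</p>
<p><a href="/coupons/">Browse current offers</a> or <a href="/">go to the homepage</a>.</p>
<p><a href="/alerts/">Sign up for alerts</a> so you don't miss out again.</p>
"""


def status_for(path: str) -> HTTPStatus:
    """410 for known retired offers, 404 for anything else in this sketch."""
    return HTTPStatus.GONE if path in GONE_PATHS else HTTPStatus.NOT_FOUND


class ExpiredOfferHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status = status_for(self.path)
        page = GONE_PAGE if status is HTTPStatus.GONE else "<p>Not found</p>"
        body = page.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, something like `HTTPServer(("127.0.0.1", 8000), ExpiredOfferHandler).serve_forever()` (with `HTTPServer` imported from `http.server`) serves the page. Note that `self.path` includes any query string, which this sketch does not strip.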

Incidentally, 'Crawl Budget' is one of those perspective things itself, in that Crawl Budget is how we at the receiving end perceive the effects of Crawl Prioritization – But Google engineers will flatly deny that there is any such thing. Yet the same people will happily talk with you at length about Crawl Prioritization.

To Google, the word 'Budget' would suggest there is a fixed limit, a set value, to what Googlebot will crawl, and that's not how they see it. If there was infinite time in a day then Googlebot would eventually crawl every known URL in that day.

It's simply that in the real world there comes a point at which enough higher-priority URLs get added to the list that Googlebot never runs out of those ever-replenishing higher-priority items, and so never gets to the lower-priority pages. But if there was a sudden quiet week for news, big events, and major brands publishing, it absolutely would.

Petter

Massive redirect lists are more of a configuration issue on some servers (some have caps on how many rules you can add) or a Content Management System (CMS) issue (as CMSs can also have caps). There's also a server hardware and software issue as the redirect table grows (if the rule is to check the redirect list prior to fetching the HTML document, a big list means a longer time to first byte (TTFB)).

But having worked on similar sites, I can say there are ways to handle this that don't blow up your redirect list. If the template editor in the CMS has fixed fields for how long the coupon will be available, then you can set the Expires HTTP header to correspond (telling search engines the final date this content is "of value"). Once that date hits, you can noindex the page, change the page title to something easily recognizable to catch backlinks, and change the content to include e.g. a new hero image that says the coupon has expired, followed by a "people also looked at" list of similar active coupons.

After 6 months, you can automate a check against Google Analytics (GA) data (or server logs) to see if the page has any traffic. If it does, have the check email you (or whoever handles this) to look at it in GA (and build reports that you check in on from time to time), and keep the page until a 301 can be set, if one is needed.

If it doesn't have any traffic, you can delete it (with a custom 404/410 page). Or, if you have a good folder structure (all coupons from 2013 are in /coupons/2013/xxx/xxx style page paths), you can add a catch-all rule for /coupons/2013/* to redirect to the main folder, which you change into a similar thing (a hero image saying the coupons in that folder have expired, look at some of our coupons that are available today).
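The lifecycle described above can be sketched as a date-based decision function. Everything here is an assumption for illustration: the function names, the action labels, treating "6 months" as 182 days, and treating the coupon as expired on the expiry date itself. The traffic figure would come from GA or server logs.

```python
from datetime import date, datetime, time, timedelta, timezone
from email.utils import format_datetime

REVIEW_AFTER = timedelta(days=182)  # "after 6 months", approximated


def expires_header(expires_on: date) -> str:
    """Format the coupon end date as an HTTP-date for the Expires header."""
    moment = datetime.combine(expires_on, time.min, tzinfo=timezone.utc)
    return format_datetime(moment, usegmt=True)


def coupon_action(expires_on: date, today: date, recent_visits: int) -> str:
    """Pick the handling for one coupon page on a given day."""
    if today < expires_on:
        return "live: send Expires header"
    if today - expires_on < REVIEW_AFTER:
        return "noindex: retitle, show expired notice and similar coupons"
    if recent_visits > 0:
        return "review: keep page, email owner, consider a 301"
    return "delete: serve custom 404/410"


end = date(2015, 6, 30)
header = expires_header(end)
early = coupon_action(end, date(2015, 6, 1), recent_visits=40)
stale = coupon_action(end, date(2016, 3, 1), recent_visits=0)
```

A scheduled job could run `coupon_action` over every coupon once a day and apply the change, or send the email for the "review" case.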

Just to clarify, the page title change is so that you can set up a Data Studio table which can easily track traffic to expired posts alongside referrer info. That way you can quickly identify links you may want to update, and you can also identify 301s you want to support for some time. You can also set alerts in GA for "Google / organic" traffic to pages matching that title, so that you know which pages to submit via Google Search Console (GSC) for deindexing, e.g. once a week.

📰👈

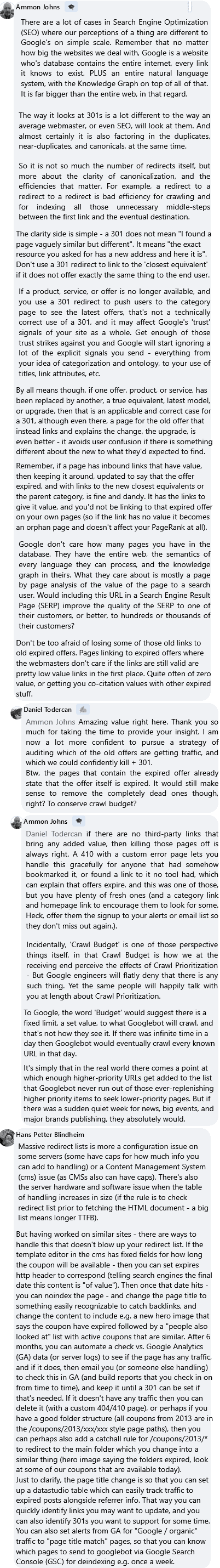

An SEO Case Study on My Reverse Content Post Clustering

Ammon: Google don’t count Words or Keywords, but they count Page Speed

Disavow spammy links, Build a GMB and Expertise, Authoritativeness, Trustworthiness (EAT)

Doesn’t Google implement anything in SEO that wasn’t mentioned in the Patent?

Is Crawled Currently Not Indexed Will Never or Hard To Show because It Only been Archive?

The Most Important Tool in Search Engine Optimization (SEO)

Google uses Natural Language Processing (NLP) instead of Latent Semantic Indexing (LSI) due to Both Different Patent Owners